PydanticAI 中文指南

2025年3月6日

官方原版:PydanticAI

开源仓库页面:pydantic-ai

本文由AI翻译和排版,请辨别使用。本文只用于学习。

简介

PydanticAI 是一个专为 Python 设计的代理框架,旨在简化使用生成式 AI 构建生产级应用的过程,让开发者体验更加顺畅。

FastAPI 通过提供创新且人性化的设计,基于 Pydantic 的基石,革命性地推动了 Web 开发。这一设计不仅提升了开发效率,也使得 Web 开发变得更加便捷和高效。

类似地,几乎所有的 Python 代理框架和LLM库都采用了 Pydantic,但当我们开始使用 Pydantic Logfire 中的LLMs时,我们没有找到能给我们带来同样感觉的东西。

我们构建 PydanticAI 的初衷,就是将 FastAPI 的便捷性带到 GenAI 应用开发领域。

为什么选择 PydanticAI

- 由 Pydantic 团队打造:该团队也是 OpenAI SDK、Anthropic SDK、LangChain、LlamaIndex、AutoGPT、Transformers、CrewAI、Instructor 等众多项目的幕后力量。

- 支持 OpenAI、Anthropic、Gemini、Deepseek、Ollama、Groq、Cohere 和 Mistral 等模型,并提供了简单易用的接口,方便扩展对其他模型的支持。

- Pydantic Logfire 集成:无缝与 Pydantic Logfire 集成,为您的LLM-驱动应用程序提供实时调试、性能监控和行为跟踪,让您轻松实现应用调试和性能监控。

- 类型安全:旨在让类型检查尽可能强大且对您来说信息丰富,以提升您的体验。

- 以 Python 为中心的设计:利用 Python 熟悉的控制流和代理组合,轻松构建您的 AI 驱动项目。这使得您能够应用在任意其他(非 AI)项目中常用的标准 Python 最佳实践,使项目开发更加便捷。

- 利用 Pydantic 的力量验证和结构化模型输出,确保每次运行生成的响应保持一致。

- 依赖注入系统:提供可选的依赖注入功能,以便为您的智能体系统提示、工具和结果验证器提供数据和服务的功能。这种功能对于进行测试和基于评估的迭代开发特别有用,可以大大提高开发效率。

- 提供流式响应功能,可连续输出LLM信息,并即时验证,确保结果既快速又准确。

- Pydantic 图形支持通过类型提示定义强大的图,这在标准控制流退化成面条代码的复杂应用中非常有用。这种用法在处理复杂应用时尤其有用,因为它可以避免代码变得难以管理。

你好,世界

这里是一个 PydanticAI 的简单示例:

hello_world.py

from pydantic_ai import Agent

agent = Agent(

'google-gla:gemini-1.5-flash',

system_prompt='Be concise, reply with one sentence.',

)

result = agent.run_sync('Where does "hello world" come from?')

print(result.data)

"""

The first known use of "hello, world" was in a 1974 textbook about the C programming language.

"""

(本示例完整,可以直接运行)

交易过程应极为简洁:PydanticAI 将系统提示和用户查询发送至LLM,模型将给出文本回复。

目前内容还不够吸引人,但我们可以轻松地加入“工具”、动态系统提示和结构化响应,以构建更加强大的智能体。

工具与依赖注入示例

这里是一个使用 PydanticAI 构建银行客服代理的简洁示例:这是一个使用 PydanticAI 构建银行客服代理的示例,非常简洁。

bank_support.py

from dataclasses import dataclass

from pydantic import BaseModel, Field

from pydantic_ai import Agent, RunContext

from bank_database import DatabaseConn

@dataclass

class SupportDependencies:

customer_id: int

db: DatabaseConn

class SupportResult(BaseModel):

support_advice: str = Field(description='Advice returned to the customer')

block_card: bool = Field(description="Whether to block the customer's card")

risk: int = Field(description='Risk level of query', ge=0, le=10)

support_agent = Agent(

'openai:gpt-4o',

deps_type=SupportDependencies,

result_type=SupportResult,

system_prompt=(

'You are a support agent in our bank, give the '

'customer support and judge the risk level of their query.'

),

)

@support_agent.system_prompt

async def add_customer_name(ctx: RunContext[SupportDependencies]) -> str:

customer_name = await ctx.deps.db.customer_name(id=ctx.deps.customer_id)

return f"The customer's name is {customer_name!r}"

@support_agent.tool

async def customer_balance(

ctx: RunContext[SupportDependencies], include_pending: bool

) -> float:

"""Returns the customer's current account balance."""

return await ctx.deps.db.customer_balance(

id=ctx.deps.customer_id,

include_pending=include_pending,

)

...

async def main():

deps = SupportDependencies(customer_id=123, db=DatabaseConn())

result = await support_agent.run('What is my balance?', deps=deps)

print(result.data)

"""

support_advice='Hello John, your current account balance, including pending transactions, is $123.45.' block_card=False risk=1

"""

result = await support_agent.run('I just lost my card!', deps=deps)

print(result.data)

"""

support_advice="I'm sorry to hear that, John. We are temporarily blocking your card to prevent unauthorized transactions." block_card=True risk=8

"""

完整示例 bank_support.py 示例

为了简洁,这里展示的代码不完整( DatabaseConn 的定义缺失);您可以在以下链接中找到完整的 bank_support.py 示例。

使用 Pydantic Logfire 进行仪表化的内容

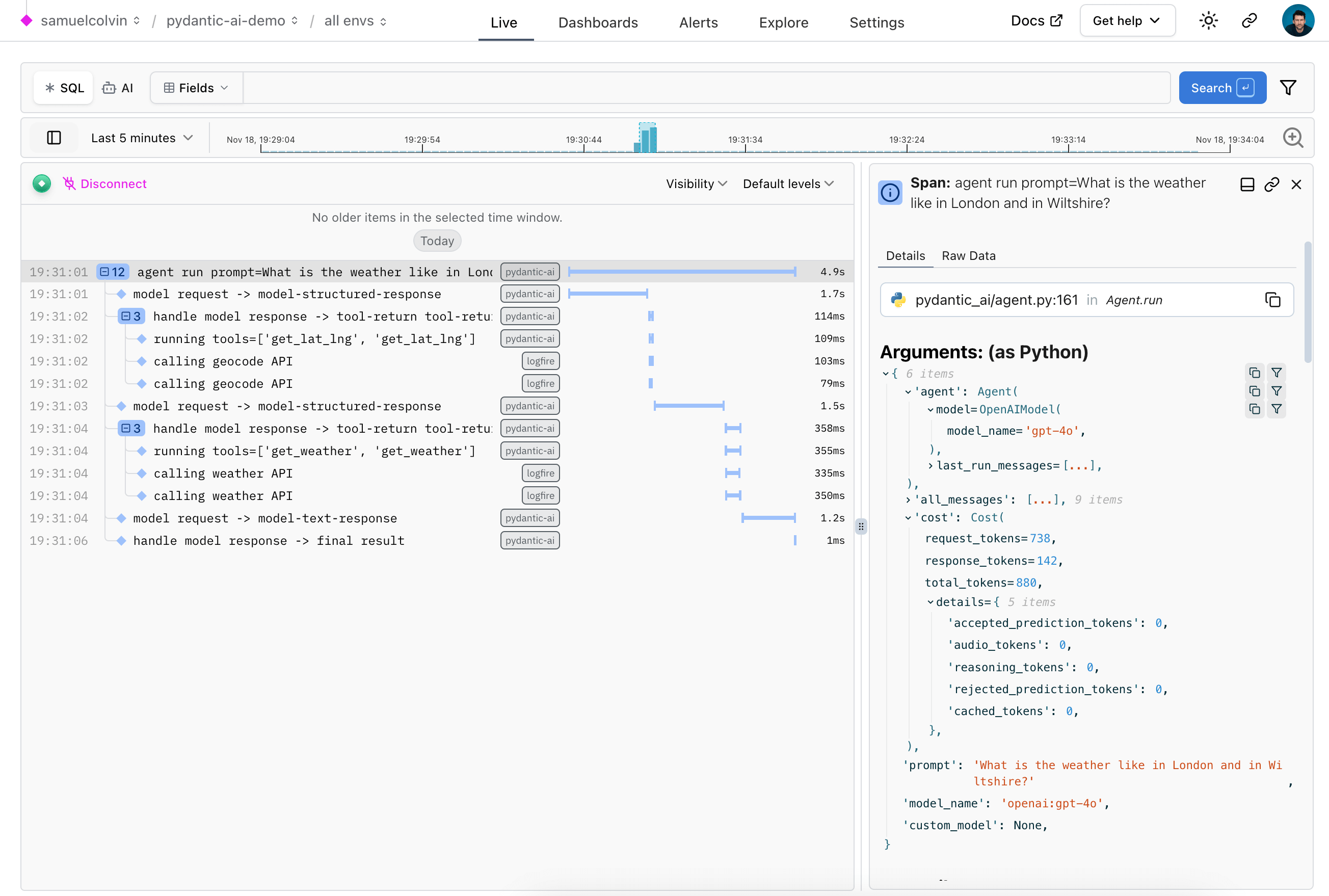

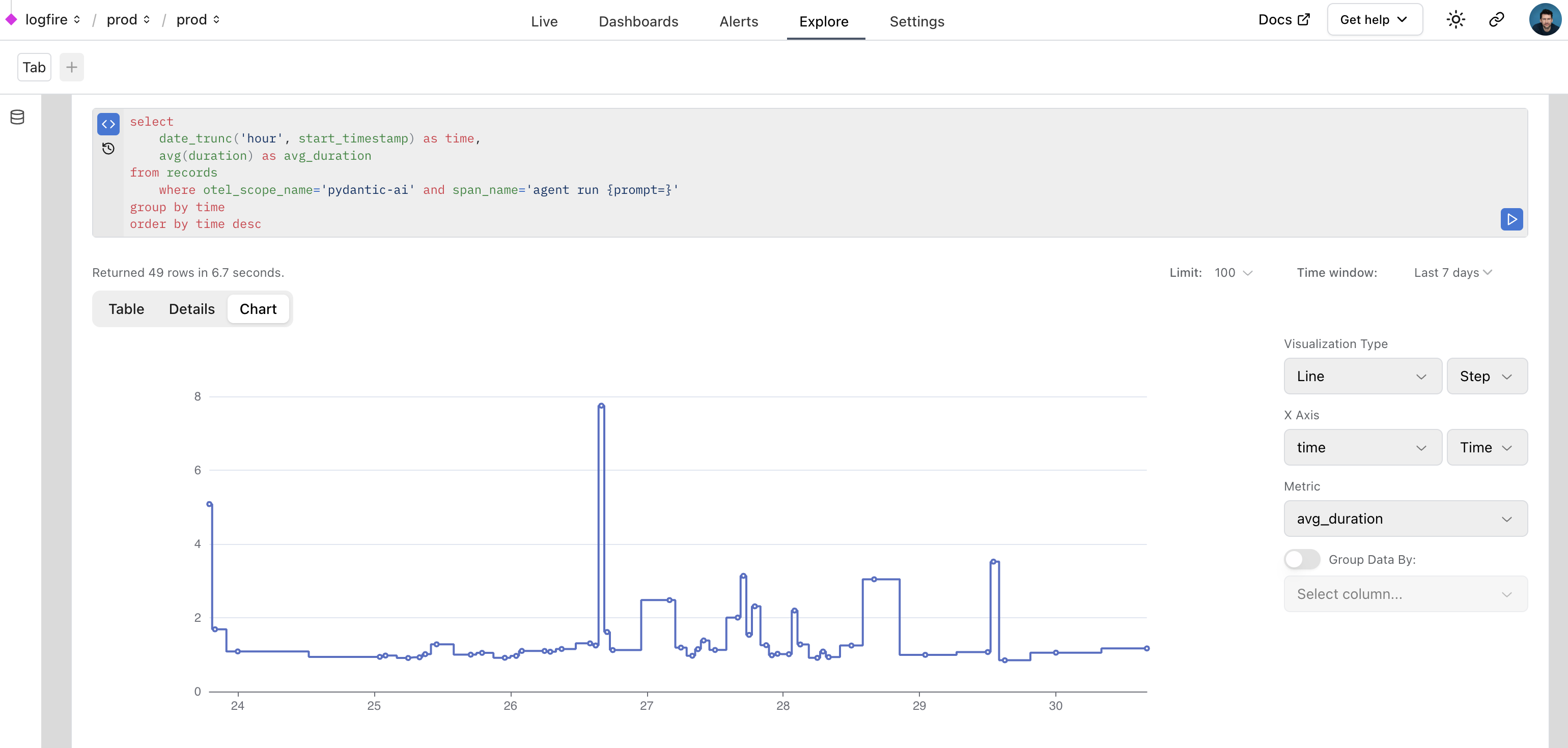

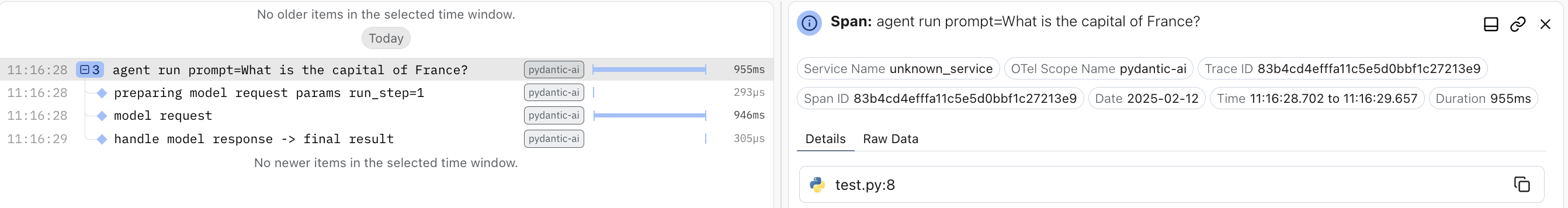

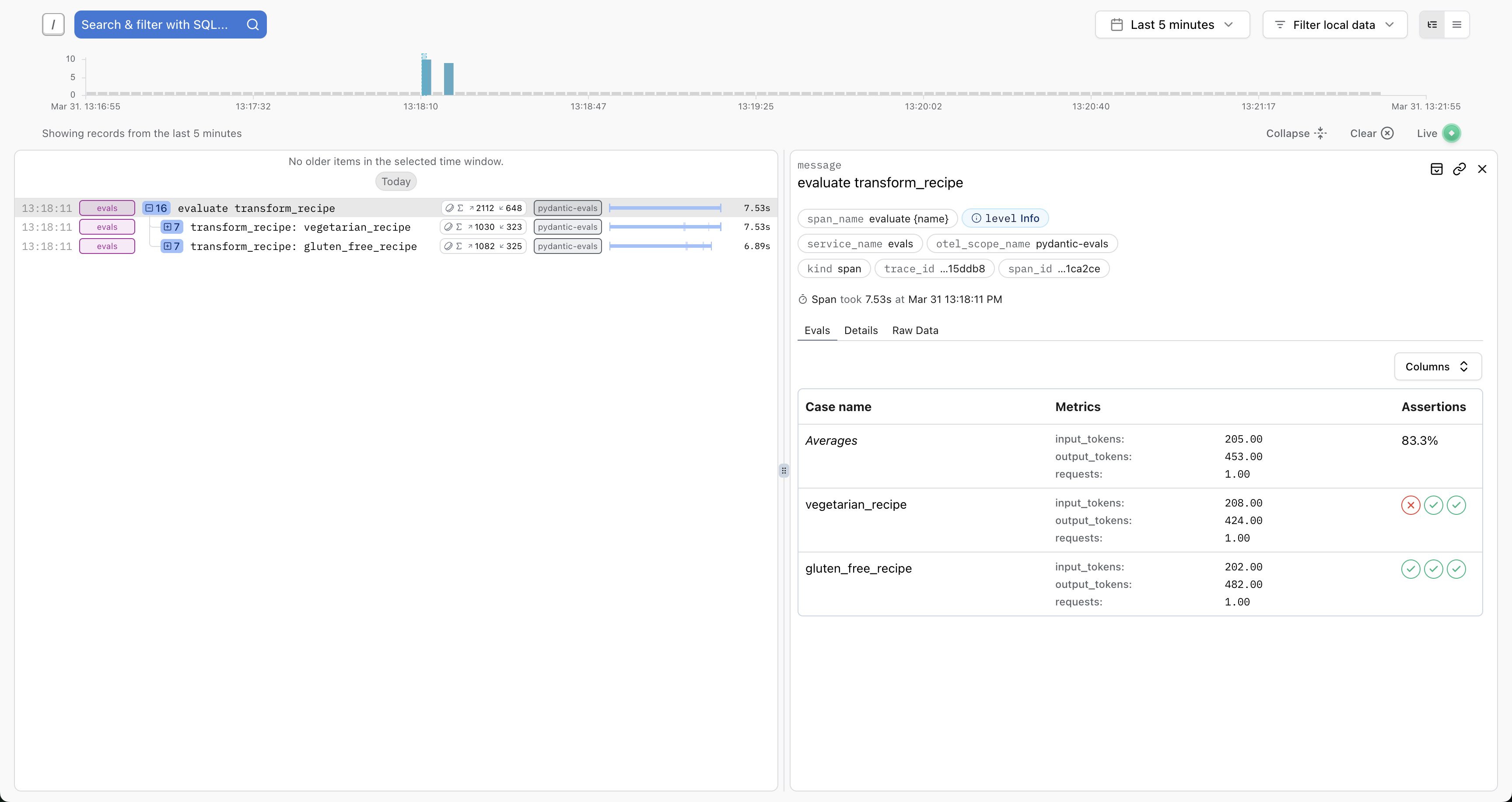

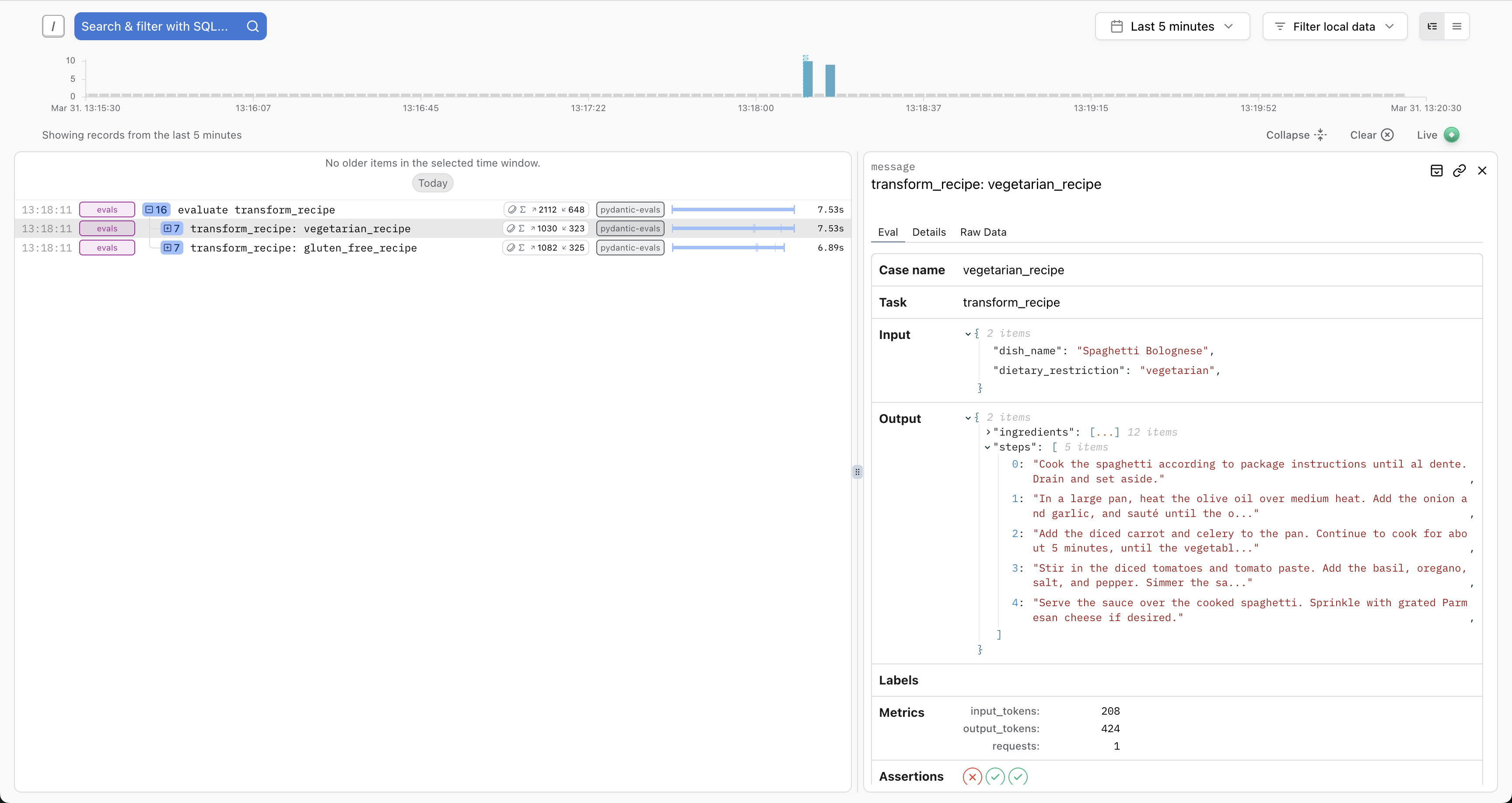

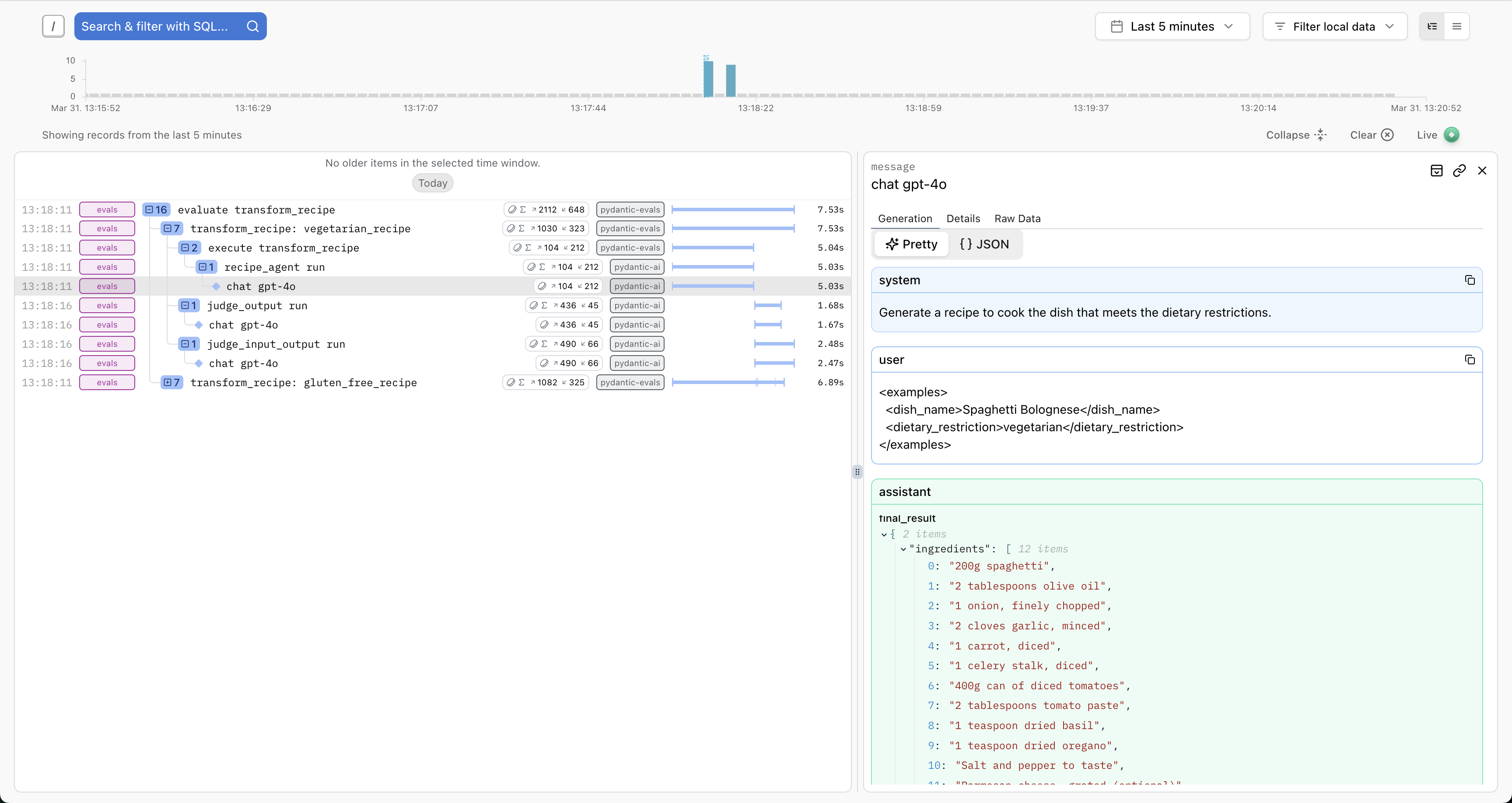

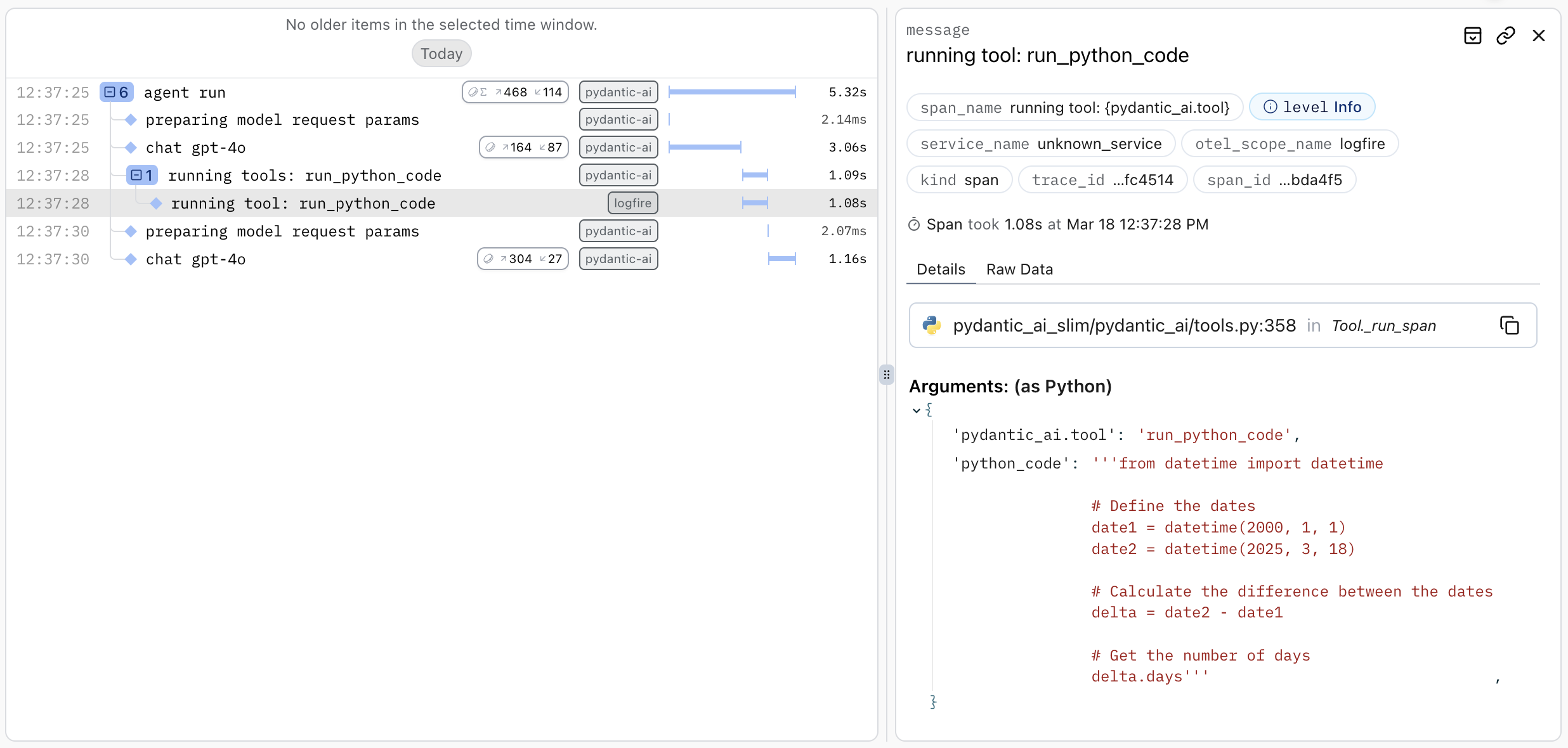

为了理解上述运行的流程,我们可以通过 Pydantic Logfire 观察代理的行为,这样可以使描述更加自然易懂。

要完成这项任务,我们需要先配置好 logfire,然后在代码中加入以下内容:

bank_support_with_logfire.py

...

from pydantic_ai import Agent, RunContext

from bank_database import DatabaseConn

import logfire

logfire.configure()

logfire.instrument_asyncpg()

...

support_agent = Agent(

'openai:gpt-4o',

deps_type=SupportDependencies,

result_type=SupportResult,

system_prompt=(

'You are a support agent in our bank, give the '

'customer support and judge the risk level of their query.'

),

instrument=True,

)

这足以让你一睹你的代理在行动中的风采:

查看监控和性能以获取更多信息。

下一步行动

尝试使用 PydanticAI,请按照示例中的说明进行操作。

阅读文档,了解如何使用 PydanticAI 构建应用程序的详细信息。

阅读 API 参考文档,以理解 PydanticAI 的接口使用方法。

安装指南

PydanticAI 可在 PyPI 上以 pydantic-ai 的形式获取,安装过程非常简便:

(需要 Python 3.9 及以上版本)

这将安装 pydantic_ai 包、核心依赖项以及使用 PydanticAI 中包含的所有模型所需的库。如果您只想使用某个特定模型,可以安装 PydanticAI 的“精简版”。

使用 Pydantic Logfire

PydanticAI 与 Pydantic Logfire 的集成非常出色(虽然这是完全可选的),能够帮助您查看并理解代理的运行情况。

使用 PydanticAI 与 Logfire 集成时,请安装 pydantic-ai 或 pydantic-ai-slim ,并选择包含 logfire 的可选组件:

pip install "pydantic-ai[logfire]"

uv add "pydantic-ai[logfire]"

请按照以下步骤进行配置,遵循 Logfire 的安装指南。

运行示例

我们将 pydantic_ai_examples 目录独立打包成 PyPI 包( pydantic-ai-examples ),这样做可以极大地方便用户进行自定义和运行示例。

安装示例时,请使用可选的 examples 组:

pip install "pydantic-ai[examples]"

uv add "pydantic-ai[examples]"

运行示例,请参照示例文档中的操作指南。

精简安装

如果您已知将使用哪个模型,并希望避免安装不必要的包,可以使用 pydantic-ai-slim 包。例如,仅使用 OpenAIModel 时,您将执行以下操作:

pip install "pydantic-ai-slim[openai]"

uv add "pydantic-ai-slim[openai]"

pydantic-ai-slim 包含以下可选分组:

logfire— 安装logfirePyPI(点击安装)evals— 安装pydantic-evalsPyPI(点击安装)openai— 安装openaiPyPI(点击安装)vertexai— 安装google-authPyPI ↗ 和requestsPyPI ↗(此处为初始翻译,已尽量保持原意,但根据中文习惯进行了调整)anthropic— 安装anthropicPyPI(点击安装)groq— 安装groqPyPI(点击安装)mistral— 安装mistralaiPyPI(点击安装)cohere- 安装依赖coherePyPI ↗duckduckgo- 安装依赖duckduckgo-searchPyPI ↗tavily- 安装依赖tavily-pythonPyPI ↗

请参阅模型文档,以获取有关每个模型所需可选依赖项的详细信息。

您可以为多种模型和场景安装依赖项,例如:

pip install "pydantic-ai-slim[openai,vertexai,logfire]"

uv add "pydantic-ai-slim[openai,vertexai,logfire]"

安装指南

PydanticAI 可在 PyPI 上以 pydantic-ai 的形式获取,安装过程非常简便:

(需要 Python 3.9 及以上版本)

这将安装 pydantic_ai 包、核心依赖项以及使用 PydanticAI 中包含的所有模型所需的库。如果您只想使用某个特定模型,可以安装 PydanticAI 的“精简版”。

使用 Pydantic Logfire

PydanticAI 与 Pydantic Logfire 的集成非常出色(虽然这是完全可选的),能够帮助您查看并理解代理的运行情况。

使用 PydanticAI 与 Logfire 集成时,请安装 pydantic-ai 或 pydantic-ai-slim ,并选择包含 logfire 的可选组件:

pip install "pydantic-ai[logfire]"

uv add "pydantic-ai[logfire]"

请按照以下步骤进行配置,遵循 Logfire 的安装指南。

运行示例

我们将 pydantic_ai_examples 目录独立打包成 PyPI 包( pydantic-ai-examples ),这样做可以极大地方便用户进行自定义和运行示例。

安装示例时,请使用可选的 examples 组:

pip install "pydantic-ai[examples]"

uv add "pydantic-ai[examples]"

运行示例,请参照示例文档中的操作指南。

精简安装

如果您已知将使用哪个模型,并希望避免安装不必要的包,可以使用 pydantic-ai-slim 包。例如,仅使用 OpenAIModel 时,您将执行以下操作:

pip install "pydantic-ai-slim[openai]"

uv add "pydantic-ai-slim[openai]"

pydantic-ai-slim 包含以下可选分组:

logfire— 安装logfirePyPI(点击安装)evals— 安装pydantic-evalsPyPI(点击安装)openai— 安装openaiPyPI(点击安装)vertexai— 安装google-authPyPI ↗ 和requestsPyPI ↗(此处为初始翻译,已尽量保持原意,但根据中文习惯进行了调整)anthropic— 安装anthropicPyPI(点击安装)groq— 安装groqPyPI(点击安装)mistral— 安装mistralaiPyPI(点击安装)cohere- 安装依赖coherePyPI ↗duckduckgo- 安装依赖duckduckgo-searchPyPI ↗tavily- 安装依赖tavily-pythonPyPI ↗

请参阅模型文档,以获取有关每个模型所需可选依赖项的详细信息。

您可以为多种模型和场景安装依赖项,例如:

pip install "pydantic-ai-slim[openai,vertexai,logfire]"

uv add "pydantic-ai-slim[openai,vertexai,logfire]"

获取帮助

如果您需要帮助入门 PydanticAI 或进行高级操作,以下资源可能对您有所帮助。

Slack

加入 Pydantic Slack 的 [#pydantic-ai](https://logfire.pydantic.dev/docs/join-slack/) 频道,您可以在那里提问、寻求帮助,并与其他人交流 PydanticAI 相关的话题。同时,该平台还设有 Pydantic、Logfire 和 FastUI 的专属频道。

如果您使用的是 Logfire Pro 计划,您还可以获得一个专属的私有 Slack 协作频道。这样可以让沟通更加高效和私密。

GitHub 问题

PydanticAI 的 GitHub 仓库问题区是一个极佳的提问和反馈平台。

贡献

我们热切期待您的贡献,为 PydanticAI 添砖加瓦!

安装与配置

克隆您的分支后,请切换到仓库目录下

git clone git@github.com:<your username>/pydantic-ai.git

cd pydantic-ai

安装 uv (版本 0.4.30 或更新版本)及 pre-commit

我们在这里使用 pipx,如需了解其他选项,请参阅:

要了解 pipx 的具体内容,请参考以下文档

pipx install uv pre-commit

安装所有依赖项和 pre-commit 钩子( pydantic-ai )

运行测试等相关操作

我们使用 make 来管理您需要执行的大多数命令。

查看可用命令的详细信息,请执行以下命令:

运行代码格式化、代码检查、静态类型检查和测试,并生成覆盖率报告,请按照以下步骤操作:

文档修改

在本地运行文档页面,请按照以下步骤操作:

添加新模型到 PydanticAI 的规则说明

为了避免给 PydanticAI 的维护者带来过大的工作压力,我们无法接受所有模型的贡献。因此,我们制定了以下规则,用于明确何时接纳新模型,何时不予接纳。这样做有望降低失望和无效工作的风险。

- 添加新模型并引入额外依赖项时,该依赖项需在 PyPI 上连续 3 个月每月下载量超过 50 万次

- 添加一个新模型,该模型内部采用其他模型的逻辑,且不依赖额外库,其 GitHub 组织需拥有超过 20k 的总星标数

- 对于任何其他模型,我们乐意添加一段描述,并提供包含链接和使用说明的自定义 URL 及 API 密钥即可

- 对于需要更多逻辑的其他模型,我们建议您发布自己的 Python 包。该包应依赖于

pydantic-ai-slim并实现一个继承自我们提供的ModelABC 的模型。

如果您不确定是否添加模型,请提交一个相关的问题。

故障排除指南

以下是一些关于如何解决您在使用 PydanticAI 时可能遇到的一些常见问题的建议。如果您遇到的问题未在此列出或文档中未提及,欢迎在 Pydantic Slack上咨询或直接在 GitHub上提交问题。

Jupyter Notebook 错误问题

RuntimeError: This event loop is already running

该错误是由于 Jupyter 笔记本的事件循环与 PydanticAI 存在冲突造成的。解决这一冲突的方法之一是使用 nest-asyncio 。具体来说,在运行任何代理之前,请先执行以下步骤:

import nest_asyncio

nest_asyncio.apply()

注意:此修复同样适用于 Google Colab。

API 密钥配置

UserError: API key must be provided or set in the [MODEL]_API_KEY environment variable

如果您在设置模型 API 密钥时遇到问题,请访问“模型”页面,学习如何设置环境变量或传递参数的详细步骤。

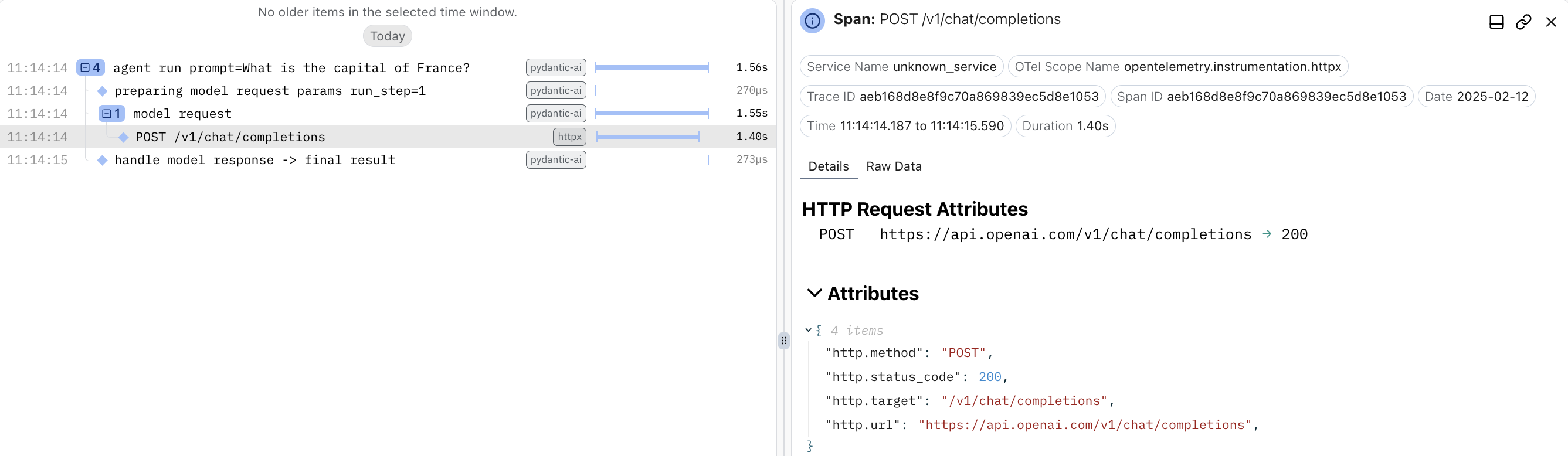

监控 HTTPX 请求情况

您可以在模型中使用自定义客户端来在运行时访问特定的请求、响应和头部信息,这样表达更为自然易懂。

使用 logfire 的 HTTPX 集成来监控上述内容特别有用。

文档

代理(Agents)

简介

代理是 PydanticAI 与 LLMs 交互的核心途径。

在某些情况下,一个代理可以控制整个应用程序或组件,而多个代理之间的交互则可以体现更为复杂的流程,使描述更加自然易懂。

Agent 类提供了完整的 API 文档,从本质上讲,您可以将代理视为一个用于容纳的容器:

| 组件 | 描述 |

|---|---|

| 系统提示信息System prompt(s) | 开发者为LLM编写的指令集,内容如下: |

| 函数工具Function tool(s) | LLM 在生成响应过程中可能调用的函数,用于获取相关信息。 |

| 结构化结果类型Structured result type | 结构化数据类型,LLM必须在运行结束时返回(如果已指定)。 |

| 依赖类型约束Dependency type constraint | 系统提示功能、工具以及结果验证器在执行时可能会调用依赖库。这样的表述更加自然,易于理解。 |

| LLM 模型LLM model | 可选默认值 LLM 与代理关联的模型。运行代理时也可指定。 |

| 模型配置Model Settings | 可选的默认模型设置,有助于优化请求。运行代理时也可指定。 |

在类型中,代理在依赖和结果类型上具有通用性,例如,一个需要类型 Foobar 的依赖和返回类型 list[str] 的结果的代理将具有类型 Agent[Foobar, list[str]] 。实际上,你无需过多关注这一点,这仅仅意味着你的 IDE 能够告诉你何时使用了正确的类型,并且如果你选择使用静态类型检查,它应该能够与 PydanticAI 良好地协同工作。

这里是一个模拟轮盘赌的代理的示例:

roulette_wheel.py

from pydantic_ai import Agent, RunContext

roulette_agent = Agent(

'openai:gpt-4o',

deps_type=int,

result_type=bool,

system_prompt=(

'Use the `roulette_wheel` function to see if the '

'customer has won based on the number they provide.'

),

)

@roulette_agent.tool

async def roulette_wheel(ctx: RunContext[int], square: int) -> str:

"""check if the square is a winner"""

return 'winner' if square == ctx.deps else 'loser'

# Run the agent

success_number = 18

result = roulette_agent.run_sync('Put my money on square eighteen', deps=success_number)

print(result.data)

#> True

result = roulette_agent.run_sync('I bet five is the winner', deps=success_number)

print(result.data)

#> False

代理设计旨在实现重用,类似于 FastAPI 应用

代理对象旨在一次性创建(通常作为模块的全局变量)并在整个应用程序中重复使用,这就像一个小型的 FastAPI 应用或 APIRouter 一样。

运行中的代理

运行代理有四种方法:

agent.run()— 一个返回包含完成响应的RunResult的协程。 (更自然地表达)agent.run_sync()— 这是一个返回包含完成响应的RunResult的普通同步函数(实际上内部只是调用了loop.run_until_complete(self.run()))。agent.run_stream()— 这是一个协程,返回一个StreamedRunResult,其中包含用于将响应作为异步可迭代对象流式传输的方法。agent.iter()— 这是一个上下文管理器,用于返回一个AgentRun对象,该对象是一个异步可迭代器,可以遍历代理底层的Graph节点。

这里是一个展示前三个简单例子的例子:

run_agent.py

from pydantic_ai import Agent

agent = Agent('openai:gpt-4o')

result_sync = agent.run_sync('What is the capital of Italy?')

print(result_sync.data)

#> Rome

async def main():

result = await agent.run('What is the capital of France?')

print(result.data)

#> Paris

async with agent.run_stream('What is the capital of the UK?') as response:

print(await response.get_data())

#> London

(本示例完整,可直接运行——运行时需添加 asyncio.run(main()) 才能执行 main )

您可以将之前运行的消息传递过来,以继续对话或提供上下文。具体信息请参考“消息和聊天历史”部分。

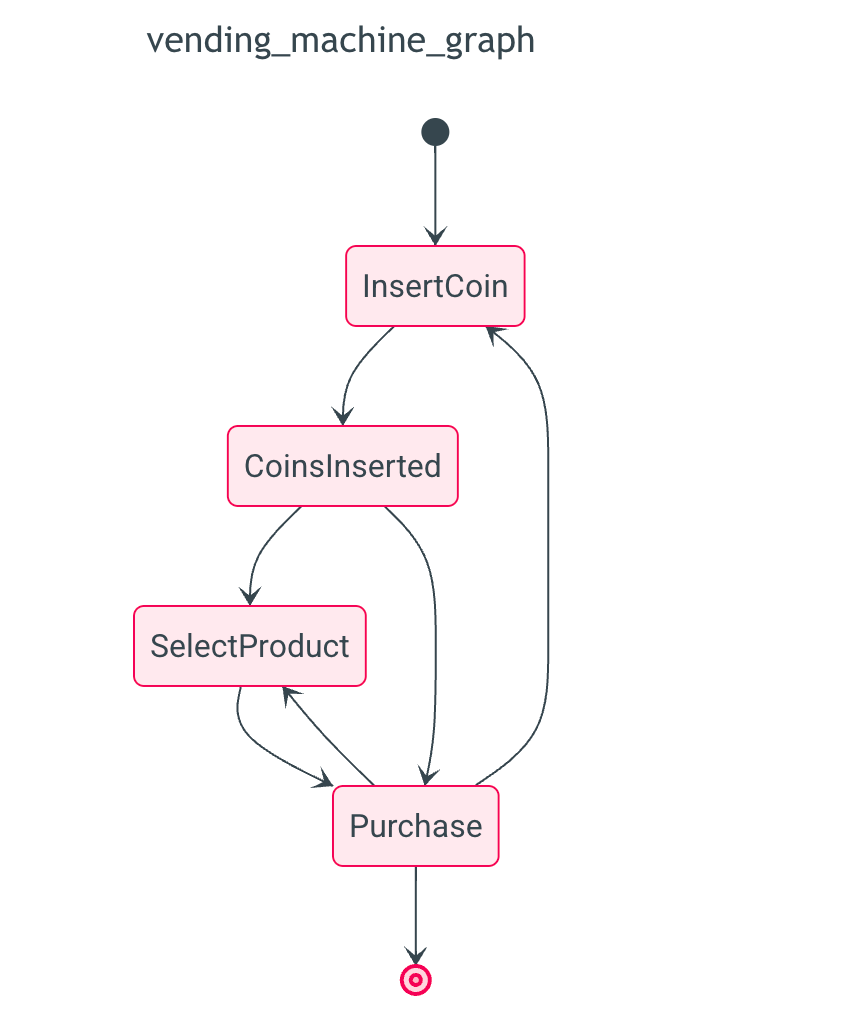

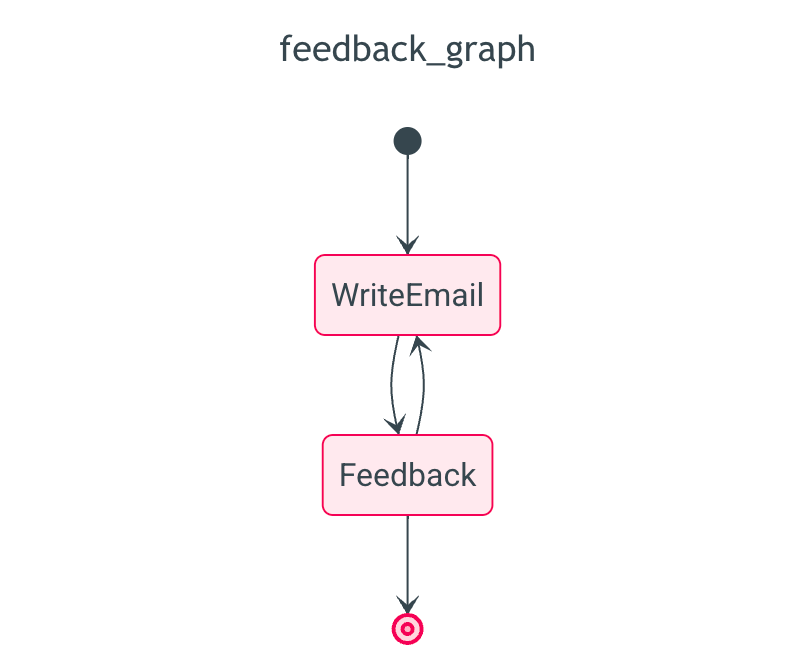

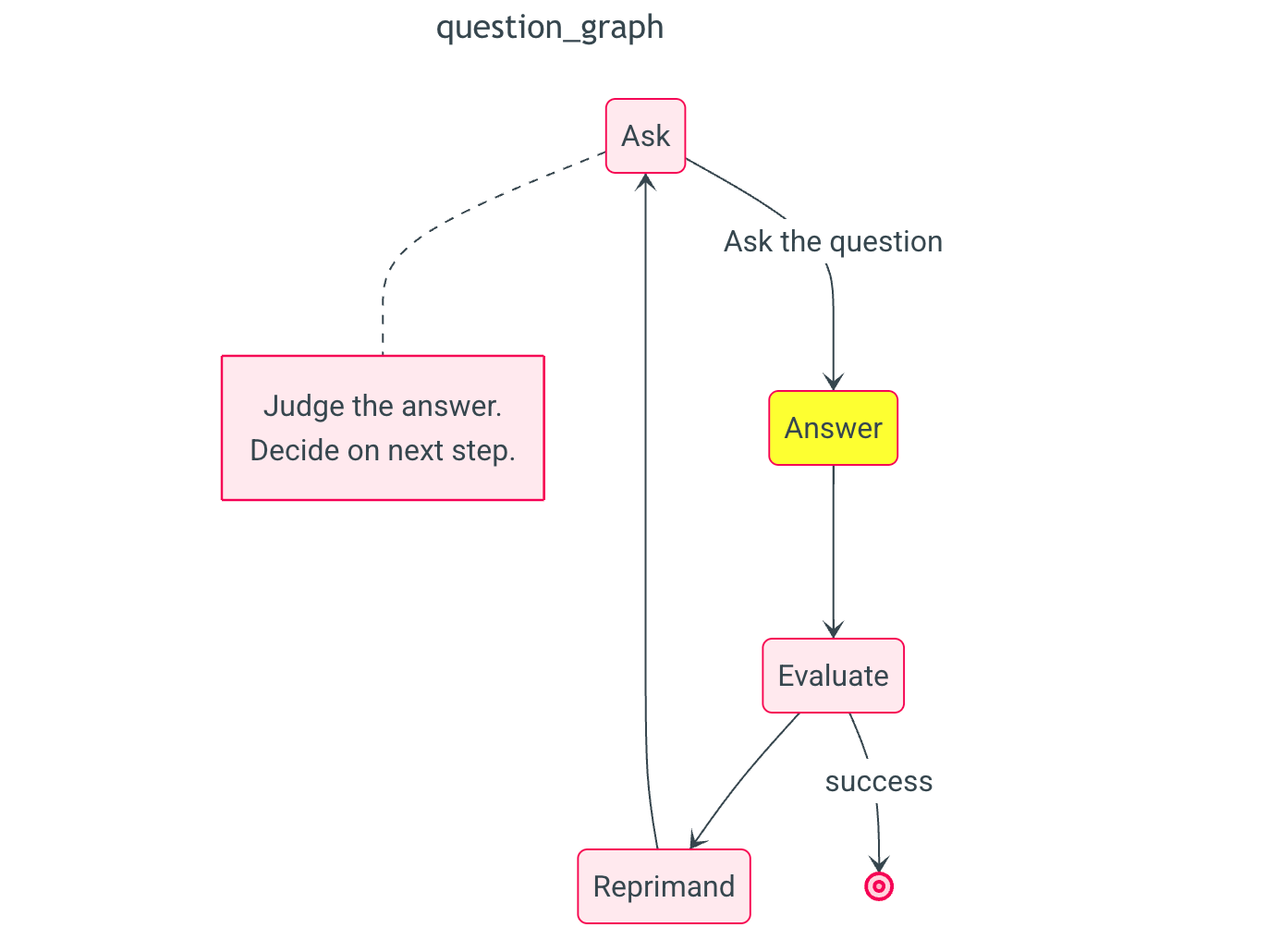

遍历代理的图

在 PydanticAI 中,每个 Agent 都利用 pydantic-graph 来管理其执行流程。pydantic-graph 是一个通用的、以类型为核心的库,用于在 Python 中构建和运行有限状态机。它并不直接依赖于 PydanticAI——您可以独立于 GenAI 使用它来处理与工作流程无关的任务——但 PydanticAI 利用它来协调模型请求和响应的处理过程,使代理的运行更加高效。

在许多情况下,您根本无需关心 pydantic-graph;直接调用 agent.run(...) 即可从始至终遍历底层图。然而,如果您需要更深入的洞察或控制——比如记录每个工具的调用,或在特定阶段加入自己的逻辑——PydanticAI 通过 Agent.iter 提供了底层的迭代过程。该方法返回一个 AgentRun ,您可以异步迭代,或者通过 next 方法逐节点手动遍历。一旦代理的图返回 End ,您将得到最终结果,以及所有步骤的详细历史记录。

async for 迭代

这里是一个使用 async for 和 iter 记录代理执行每个节点的例子:

agent_iter_async_for.py

from pydantic_ai import Agent

agent = Agent('openai:gpt-4o')

async def main():

nodes = []

# Begin an AgentRun, which is an async-iterable over the nodes of the agent's graph

async with agent.iter('What is the capital of France?') as agent_run:

async for node in agent_run:

# Each node represents a step in the agent's execution

nodes.append(node)

print(nodes)

"""

[

ModelRequestNode(

request=ModelRequest(

parts=[

UserPromptPart(

content='What is the capital of France?',

timestamp=datetime.datetime(...),

part_kind='user-prompt',

)

],

kind='request',

)

),

CallToolsNode(

model_response=ModelResponse(

parts=[TextPart(content='Paris', part_kind='text')],

model_name='gpt-4o',

timestamp=datetime.datetime(...),

kind='response',

)

),

End(data=FinalResult(data='Paris', tool_name=None, tool_call_id=None)),

]

"""

print(agent_run.result.data)

#> Paris

- “

AgentRun是一个异步迭代器,它会逐个输出流程中的每个节点(BaseNode或End)。这样的表述更加自然和易于理解。” - 运行结束时,会返回一个

End节点。

使用 .next(...) 手动进行操作

您可以通过将您希望运行的下一个节点传递给 AgentRun.next(...) 方法来手动控制迭代过程。这样,您可以在节点执行前对其进行检查或修改,根据您自己的逻辑跳过某些节点,并且更容易地捕捉到错误:

agent_iter_next.py

from pydantic_ai import Agent

from pydantic_graph import End

agent = Agent('openai:gpt-4o')

async def main():

async with agent.iter('What is the capital of France?') as agent_run:

node = agent_run.next_node

all_nodes = [node]

# Drive the iteration manually:

while not isinstance(node, End):

node = await agent_run.next(node)

all_nodes.append(node)

print(all_nodes)

"""

[

UserPromptNode(

user_prompt='What is the capital of France?',

system_prompts=(),

system_prompt_functions=[],

system_prompt_dynamic_functions={},

),

ModelRequestNode(

request=ModelRequest(

parts=[

UserPromptPart(

content='What is the capital of France?',

timestamp=datetime.datetime(...),

part_kind='user-prompt',

)

],

kind='request',

)

),

CallToolsNode(

model_response=ModelResponse(

parts=[TextPart(content='Paris', part_kind='text')],

model_name='gpt-4o',

timestamp=datetime.datetime(...),

kind='response',

)

),

End(data=FinalResult(data='Paris', tool_name=None, tool_call_id=None)),

]

"""

查看使用情况和最终结果

您可以随时通过 AgentRun 对象和 agent_run.usage() 获取使用统计信息(如令牌、请求等)。该方法将返回一个包含使用数据的 Usage 对象。

运行结束后, agent_run.final_result 将变为一个包含最终输出及相关元数据的 AgentRunResult 对象。

流媒体

这里是一个示例,展示了如何结合迭代 0#进行代理运行的流式传输

streaming.py

import asyncio

from dataclasses import dataclass

from datetime import date

from pydantic_ai import Agent

from pydantic_ai.messages import (

FinalResultEvent,

FunctionToolCallEvent,

FunctionToolResultEvent,

PartDeltaEvent,

PartStartEvent,

TextPartDelta,

ToolCallPartDelta,

)

from pydantic_ai.tools import RunContext

@dataclass

class WeatherService:

async def get_forecast(self, location: str, forecast_date: date) -> str:

# In real code: call weather API, DB queries, etc.

return f'The forecast in {location} on {forecast_date} is 24°C and sunny.'

async def get_historic_weather(self, location: str, forecast_date: date) -> str:

# In real code: call a historical weather API or DB

return (

f'The weather in {location} on {forecast_date} was 18°C and partly cloudy.'

)

weather_agent = Agent[WeatherService, str](

'openai:gpt-4o',

deps_type=WeatherService,

result_type=str, # We'll produce a final answer as plain text

system_prompt='Providing a weather forecast at the locations the user provides.',

)

@weather_agent.tool

async def weather_forecast(

ctx: RunContext[WeatherService],

location: str,

forecast_date: date,

) -> str:

if forecast_date >= date.today():

return await ctx.deps.get_forecast(location, forecast_date)

else:

return await ctx.deps.get_historic_weather(location, forecast_date)

output_messages: list[str] = []

async def main():

user_prompt = 'What will the weather be like in Paris on Tuesday?'

# Begin a node-by-node, streaming iteration

async with weather_agent.iter(user_prompt, deps=WeatherService()) as run:

async for node in run:

if Agent.is_user_prompt_node(node):

# A user prompt node => The user has provided input

output_messages.append(f'=== UserPromptNode: {node.user_prompt} ===')

elif Agent.is_model_request_node(node):

# A model request node => We can stream tokens from the model's request

output_messages.append(

'=== ModelRequestNode: streaming partial request tokens ==='

)

async with node.stream(run.ctx) as request_stream:

async for event in request_stream:

if isinstance(event, PartStartEvent):

output_messages.append(

f'[Request] Starting part {event.index}: {event.part!r}'

)

elif isinstance(event, PartDeltaEvent):

if isinstance(event.delta, TextPartDelta):

output_messages.append(

f'[Request] Part {event.index} text delta: {event.delta.content_delta!r}'

)

elif isinstance(event.delta, ToolCallPartDelta):

output_messages.append(

f'[Request] Part {event.index} args_delta={event.delta.args_delta}'

)

elif isinstance(event, FinalResultEvent):

output_messages.append(

f'[Result] The model produced a final result (tool_name={event.tool_name})'

)

elif Agent.is_call_tools_node(node):

# A handle-response node => The model returned some data, potentially calls a tool

output_messages.append(

'=== CallToolsNode: streaming partial response & tool usage ==='

)

async with node.stream(run.ctx) as handle_stream:

async for event in handle_stream:

if isinstance(event, FunctionToolCallEvent):

output_messages.append(

f'[Tools] The LLM calls tool={event.part.tool_name!r} with args={event.part.args} (tool_call_id={event.part.tool_call_id!r})'

)

elif isinstance(event, FunctionToolResultEvent):

output_messages.append(

f'[Tools] Tool call {event.tool_call_id!r} returned => {event.result.content}'

)

elif Agent.is_end_node(node):

assert run.result.data == node.data.data

# Once an End node is reached, the agent run is complete

output_messages.append(f'=== Final Agent Output: {run.result.data} ===')

if __name__ == '__main__':

asyncio.run(main())

print(output_messages)

"""

[

'=== ModelRequestNode: streaming partial request tokens ===',

'[Request] Starting part 0: ToolCallPart(tool_name=\'weather_forecast\', args=\'{"location":"Pa\', tool_call_id=\'0001\', part_kind=\'tool-call\')',

'[Request] Part 0 args_delta=ris","forecast_',

'[Request] Part 0 args_delta=date":"2030-01-',

'[Request] Part 0 args_delta=01"}',

'=== CallToolsNode: streaming partial response & tool usage ===',

'[Tools] The LLM calls tool=\'weather_forecast\' with args={"location":"Paris","forecast_date":"2030-01-01"} (tool_call_id=\'0001\')',

"[Tools] Tool call '0001' returned => The forecast in Paris on 2030-01-01 is 24°C and sunny.",

'=== ModelRequestNode: streaming partial request tokens ===',

"[Request] Starting part 0: TextPart(content='It will be ', part_kind='text')",

'[Result] The model produced a final result (tool_name=None)',

"[Request] Part 0 text delta: 'warm and sunny '",

"[Request] Part 0 text delta: 'in Paris on '",

"[Request] Part 0 text delta: 'Tuesday.'",

'=== CallToolsNode: streaming partial response & tool usage ===',

'=== Final Agent Output: It will be warm and sunny in Paris on Tuesday. ===',

]

"""

额外配置

使用限制说明

PydanticAI 提供了一种 UsageLimits 结构,以便帮助您在模型运行过程中限制令牌和/或请求的使用,使表述更加自然易懂。

您可以通过传入 usage_limits 参数给 run{_sync,_stream} 函数来应用这些设置,表达更为自然。

考虑以下示例,我们在这里限制了响应令牌的数量:

from pydantic_ai import Agent

from pydantic_ai.exceptions import UsageLimitExceeded

from pydantic_ai.usage import UsageLimits

agent = Agent('anthropic:claude-3-5-sonnet-latest')

result_sync = agent.run_sync(

'What is the capital of Italy? Answer with just the city.',

usage_limits=UsageLimits(response_tokens_limit=10),

)

print(result_sync.data)

#> Rome

print(result_sync.usage())

"""

Usage(requests=1, request_tokens=62, response_tokens=1, total_tokens=63, details=None)

"""

try:

result_sync = agent.run_sync(

'What is the capital of Italy? Answer with a paragraph.',

usage_limits=UsageLimits(response_tokens_limit=10),

)

except UsageLimitExceeded as e:

print(e)

#> Exceeded the response_tokens_limit of 10 (response_tokens=32)

限制请求数量有助于防止无限循环或过度调用工具,这样做很有用。

from typing_extensions import TypedDict

from pydantic_ai import Agent, ModelRetry

from pydantic_ai.exceptions import UsageLimitExceeded

from pydantic_ai.usage import UsageLimits

class NeverResultType(TypedDict):

"""

Never ever coerce data to this type.

"""

never_use_this: str

agent = Agent(

'anthropic:claude-3-5-sonnet-latest',

retries=3,

result_type=NeverResultType,

system_prompt='Any time you get a response, call the `infinite_retry_tool` to produce another response.',

)

@agent.tool_plain(retries=5)

def infinite_retry_tool() -> int:

raise ModelRetry('Please try again.')

try:

result_sync = agent.run_sync(

'Begin infinite retry loop!', usage_limits=UsageLimits(request_limit=3)

)

except UsageLimitExceeded as e:

print(e)

#> The next request would exceed the request_limit of 3

备注

尤其是在您注册了众多工具时,使用 request_limit 可以防止模型过度循环调用这些工具。

模型(运行)设置

PydanticAI 提供了一种结构化的 settings.ModelSettings 请求方式,帮助您调整请求细节。通过这种方式,您可以配置诸如 temperature , max_tokens , timeout 等影响模型行为的常用参数,从而实现更精细的模型控制。

有两种方法来应用这些设置:1. 通过 run{_sync,_stream} 函数的 model_settings 参数传递。这允许根据每个请求进行细致调整。2. 在 Agent 初始化过程中通过 model_settings 参数设置。这些设置将默认应用于所有后续使用该代理的运行调用。然而,在特定运行调用期间提供的 model_settings 将覆盖代理的默认配置。

例如,如果您希望将 temperature 的设置调整为 0.0 以减少随机性,可以按照以下步骤进行操作:

from pydantic_ai import Agent

agent = Agent('openai:gpt-4o')

result_sync = agent.run_sync(

'What is the capital of Italy?', model_settings={'temperature': 0.0}

)

print(result_sync.data)

#> Rome

Model specific settings

If you wish to further customize model behavior, you can use a subclass of ModelSettings, like GeminiModelSettings, associated with your model of choice.

For example:

from pydantic_ai import Agent, UnexpectedModelBehavior

from pydantic_ai.models.gemini import GeminiModelSettings

agent = Agent('google-gla:gemini-1.5-flash')

try:

result = agent.run_sync(

'Write a list of 5 very rude things that I might say to the universe after stubbing my toe in the dark:',

model_settings=GeminiModelSettings(

temperature=0.0, # general model settings can also be specified

gemini_safety_settings=[

{

'category': 'HARM_CATEGORY_HARASSMENT',

'threshold': 'BLOCK_LOW_AND_ABOVE',

},

{

'category': 'HARM_CATEGORY_HATE_SPEECH',

'threshold': 'BLOCK_LOW_AND_ABOVE',

},

],

),

)

except UnexpectedModelBehavior as e:

print(e)

"""

Safety settings triggered, body:

<safety settings details>

"""

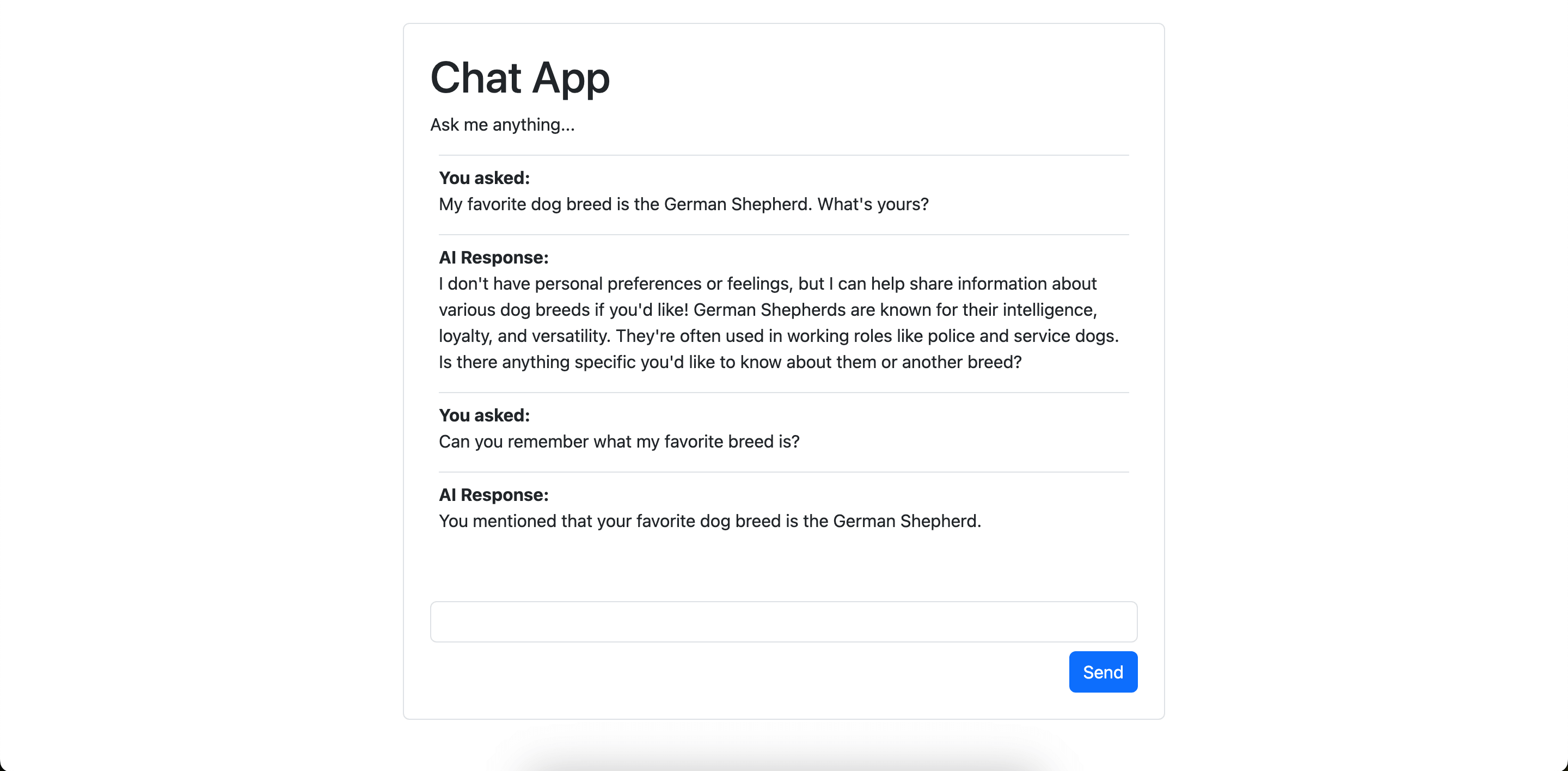

Runs vs. Conversations

An agent run might represent an entire conversation — there's no limit to how many messages can be exchanged in a single run. However, a conversation might also be composed of multiple runs, especially if you need to maintain state between separate interactions or API calls.

Here's an example of a conversation comprised of multiple runs:

conversation_example.py

from pydantic_ai import Agent

agent = Agent('openai:gpt-4o')

# First run

result1 = agent.run_sync('Who was Albert Einstein?')

print(result1.data)

#> Albert Einstein was a German-born theoretical physicist.

# Second run, passing previous messages

result2 = agent.run_sync(

'What was his most famous equation?',

message_history=result1.new_messages(),

)

print(result2.data)

#> Albert Einstein's most famous equation is (E = mc^2).

(This example is complete, it can be run "as is")

Type safe by design

PydanticAI is designed to work well with static type checkers, like mypy and pyright.

Typing is (somewhat) optional

PydanticAI is designed to make type checking as useful as possible for you if you choose to use it, but you don't have to use types everywhere all the time.

That said, because PydanticAI uses Pydantic, and Pydantic uses type hints as the definition for schema and validation, some types (specifically type hints on parameters to tools, and the result_type arguments to Agent) are used at runtime.

We (the library developers) have messed up if type hints are confusing you more than helping you, if you find this, please create an issue explaining what's annoying you!

In particular, agents are generic in both the type of their dependencies and the type of results they return, so you can use the type hints to ensure you're using the right types.

Consider the following script with type mistakes:

type_mistakes.py

from dataclasses import dataclass

from pydantic_ai import Agent, RunContext

@dataclass

class User:

name: str

agent = Agent(

'test',

deps_type=User,

result_type=bool,

)

@agent.system_prompt

def add_user_name(ctx: RunContext[str]) -> str:

return f"The user's name is {ctx.deps}."

def foobar(x: bytes) -> None:

pass

result = agent.run_sync('Does their name start with "A"?', deps=User('Anne'))

foobar(result.data)

Running mypy on this will give the following output:

➤ uv run mypy type_mistakes.py

type_mistakes.py:18: error: Argument 1 to "system_prompt" of "Agent" has incompatible type "Callable[[RunContext[str]], str]"; expected "Callable[[RunContext[User]], str]" [arg-type]

type_mistakes.py:28: error: Argument 1 to "foobar" has incompatible type "bool"; expected "bytes" [arg-type]

Found 2 errors in 1 file (checked 1 source file)

Running pyright would identify the same issues.

System Prompts

System prompts might seem simple at first glance since they're just strings (or sequences of strings that are concatenated), but crafting the right system prompt is key to getting the model to behave as you want.

Generally, system prompts fall into two categories:

- Static system prompts: These are known when writing the code and can be defined via the

system_promptparameter of theAgentconstructor. - Dynamic system prompts: These depend in some way on context that isn't known until runtime, and should be defined via functions decorated with

@agent.system_prompt.

You can add both to a single agent; they're appended in the order they're defined at runtime.

Here's an example using both types of system prompts:

system_prompts.py

from datetime import date

from pydantic_ai import Agent, RunContext

agent = Agent(

'openai:gpt-4o',

deps_type=str,

system_prompt="Use the customer's name while replying to them.",

)

@agent.system_prompt

def add_the_users_name(ctx: RunContext[str]) -> str:

return f"The user's name is {ctx.deps}."

@agent.system_prompt

def add_the_date() -> str:

return f'The date is {date.today()}.'

result = agent.run_sync('What is the date?', deps='Frank')

print(result.data)

#> Hello Frank, the date today is 2032-01-02.

(This example is complete, it can be run "as is")

Reflection and self-correction

Validation errors from both function tool parameter validation and structured result validation can be passed back to the model with a request to retry.

You can also raise ModelRetry from within a tool or result validator function to tell the model it should retry generating a response.

- The default retry count is 1 but can be altered for the entire agent, a specific tool, or a result validator.

- You can access the current retry count from within a tool or result validator via

ctx.retry.

Here's an example:

tool_retry.py

from pydantic import BaseModel

from pydantic_ai import Agent, RunContext, ModelRetry

from fake_database import DatabaseConn

class ChatResult(BaseModel):

user_id: int

message: str

agent = Agent(

'openai:gpt-4o',

deps_type=DatabaseConn,

result_type=ChatResult,

)

@agent.tool(retries=2)

def get_user_by_name(ctx: RunContext[DatabaseConn], name: str) -> int:

"""Get a user's ID from their full name."""

print(name)

#> John

#> John Doe

user_id = ctx.deps.users.get(name=name)

if user_id is None:

raise ModelRetry(

f'No user found with name {name!r}, remember to provide their full name'

)

return user_id

result = agent.run_sync(

'Send a message to John Doe asking for coffee next week', deps=DatabaseConn()

)

print(result.data)

"""

user_id=123 message='Hello John, would you be free for coffee sometime next week? Let me know what works for you!'

"""

Model errors

If models behave unexpectedly (e.g., the retry limit is exceeded, or their API returns 503), agent runs will raise UnexpectedModelBehavior.

In these cases, capture_run_messages can be used to access the messages exchanged during the run to help diagnose the issue.

agent_model_errors.py

from pydantic_ai import Agent, ModelRetry, UnexpectedModelBehavior, capture_run_messages

agent = Agent('openai:gpt-4o')

@agent.tool_plain

def calc_volume(size: int) -> int:

if size == 42:

return size**3

else:

raise ModelRetry('Please try again.')

with capture_run_messages() as messages:

try:

result = agent.run_sync('Please get me the volume of a box with size 6.')

except UnexpectedModelBehavior as e:

print('An error occurred:', e)

#> An error occurred: Tool exceeded max retries count of 1

print('cause:', repr(e.__cause__))

#> cause: ModelRetry('Please try again.')

print('messages:', messages)

"""

messages:

[

ModelRequest(

parts=[

UserPromptPart(

content='Please get me the volume of a box with size 6.',

timestamp=datetime.datetime(...),

part_kind='user-prompt',

)

],

kind='request',

),

ModelResponse(

parts=[

ToolCallPart(

tool_name='calc_volume',

args={'size': 6},

tool_call_id='pyd_ai_tool_call_id',

part_kind='tool-call',

)

],

model_name='gpt-4o',

timestamp=datetime.datetime(...),

kind='response',

),

ModelRequest(

parts=[

RetryPromptPart(

content='Please try again.',

tool_name='calc_volume',

tool_call_id='pyd_ai_tool_call_id',

timestamp=datetime.datetime(...),

part_kind='retry-prompt',

)

],

kind='request',

),

ModelResponse(

parts=[

ToolCallPart(

tool_name='calc_volume',

args={'size': 6},

tool_call_id='pyd_ai_tool_call_id',

part_kind='tool-call',

)

],

model_name='gpt-4o',

timestamp=datetime.datetime(...),

kind='response',

),

]

"""

else:

print(result.data)

(This example is complete, it can be run "as is")

Note

If you call run, run_sync, or run_stream more than once within a single capture_run_messages context, messages will represent the messages exchanged during the first call only.

模型

PydanticAI 兼容多种模型,并内置了对以下模型提供者的支持功能:

- OpenAI

- Anthropic

- Gemini via VertexAI

- Ollama

- Groq

- Mistral

- Cohere

- Bedrock

查看与 OpenAI 兼容的模型,以获取更多关于如何使用支持 OpenAI SDK 的模型(如 OpenRouter 和 Grok(xAI))的示例信息。

您还可以添加对其他模型的支持功能。

PydanticAI 不仅提供了 TestModel 和 FunctionModel 用于测试和开发,而且这些功能也便于用户进行测试和开发。

使用每个模型提供者时,您需要配置您的本地环境,并确保已安装所有必要的软件包。

模型、接口及服务提供商

PydanticAI 通过一些关键术语来描述其与不同组件的交互机制:

- 模型:这代表您希望用于处理请求的具体 LLM 模型(例如,

gpt-4o,claude-3-5-sonnet-latest,gemini-1.5-flash等)。这个模型是处理您的提示并生成回复的核心。您需要将模型作为参数传递给接口。 - 接口:这里指的是一种用于遵循特定LLM API(通常是通过封装供应商提供的 SDK,例如 python SDK)的 PydanticAI 类。这些类实现了与供应商 SDK 无关的 API,确保一个 PydanticAI 代理可以轻松地适配不同供应商,无需修改其他代码,只需更换使用的接口即可。目前,接口类名称大致遵循

<VendorSdk>Model的格式,例如,我们有OpenAIModel、AnthropicModel、GeminiModel等。这些类很快将更名为<VendorSdk>Interface,以更好地体现这一术语。 - "源:这指的是专门处理与LLM供应商认证和连接的接口类。通过将非默认的供应商类作为参数传递给接口,你可以确保你的代理会向特定的端点发送请求,或者使用特定的认证方式(例如,你可以通过特定方式使用 Vertex 认证)。特别是,这是使用 AI 网关或提供与现有接口使用的 SDK 兼容的 API 的供应商的方法。"

简而言之,您选择一个模型,PydanticAI 会使用相应的接口类,并由提供商负责连接和认证到基础服务,使操作更加便捷。

OpenAI

安装

使用 OpenAI 模型,您可以选择安装 pydantic-ai 或者安装 pydantic-ai-slim ,并可选安装 openai 组:

pip install "pydantic-ai-slim[openai]"

uv add "pydantic-ai-slim[openai]"

配置

要使用 OpenAIModel 的主 API,请访问 platform.openai.com,按照指引一路寻找,直到找到生成 API 密钥的位置。

环境变量

一旦您获取了 API 密钥,您就可以将其设置为环境变量:

export OPENAI_API_KEY='your-api-key'

您可以通过名称直接使用 OpenAIModel :

openai_model_by_name.py

from pydantic_ai import Agent

agent = Agent('openai:gpt-4o')

...

直接使用模型名称初始化模型:

openai 模型初始化.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

model = OpenAIModel('gpt-4o')

agent = Agent(model)

...

默认情况下, OpenAIModel 会使用 OpenAIProvider ,并将 base_url 设置为 https://api.openai.com/v1 ,这样的用法是默认的。

provider 参数

您可以通过 provider 参数提供一个自定义的 Provider ,这样可以使描述更加清晰。

openai 模型提供者.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel('gpt-4o', provider=OpenAIProvider(api_key='your-api-key'))

agent = Agent(model)

...

自定义 OpenAI 客户端

同样可以通过 openai_client 参数接受自定义的 AsyncOpenAI 客户端,这样您就可以根据 OpenAI API 文档中的说明自定义 organization 、 project 、 base_url 等参数。

您还可以使用 AsyncAzureOpenAI 客户端来调用 Azure OpenAI API,这样表达更为自然。

openai_azure.py

from openai import AsyncAzureOpenAI

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

client = AsyncAzureOpenAI(

azure_endpoint='...',

api_version='2024-07-01-preview',

api_key='your-api-key',

)

model = OpenAIModel(

'gpt-4o',

provider=OpenAIProvider(openai_client=client),

)

agent = Agent(model)

...

OpenAI 响应 API

PydanticAI 支持通过 OpenAIResponsesModel 类调用 OpenAI 的 Responses API,使用起来更加方便。

openai_responses_model.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIResponsesModel

model = OpenAIResponsesModel('gpt-4o')

agent = Agent(model)

...

响应 API 内置了您可以直接使用的工具,无需自行构建:

- 优化翻译:允许模型在生成回复前先通过网络搜索获取最新资讯。

- 允许模型在生成回复前搜索您的文件,以便获取相关信息。

- 计算机使用:允许模型利用计算机为您代劳执行任务。

您可以使用 OpenAIResponsesModelSettings 类来利用这些内置工具,操作更便捷。

openai_responses 模型设置.py

from openai.types.responses import WebSearchToolParam

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIResponsesModel, OpenAIResponsesModelSettings

model_settings = OpenAIResponsesModelSettings(

openai_builtin_tools=[WebSearchToolParam(type='web_search_preview')],

)

model = OpenAIResponsesModel('gpt-4o')

agent = Agent(model=model, model_settings=model_settings)

result = agent.run_sync('What is the weather in Tokyo?')

print(result.data)

"""

As of 7:48 AM on Wednesday, April 2, 2025, in Tokyo, Japan, the weather is cloudy with a temperature of 53°F (12°C).

"""

你可以在 OpenAI API 文档中详细了解 Responses API 和 Chat Completions API 的区别,翻译更加自然易懂。

Anthropic 公司

安装

使用 AnthropicModel 模型,您可以选择安装 pydantic-ai 或者在安装 pydantic-ai-slim 时选择包含 anthropic 可选组件。

pip install "pydantic-ai-slim[anthropic]"

uv add "pydantic-ai-slim[anthropic]"

配置

要通过 Anthropic 的 API 使用,请前往 console.anthropic.com/settings/keys 页面生成 API 密钥。

包含所有可用的 Anthropic 模型列表。

环境变量

一旦您获取了 API 密钥,您就可以将其设置为环境变量:

export ANTHROPIC_API_KEY='your-api-key'

您可以通过名称直接使用 AnthropicModel :

anthropic_model_by_name.py

from pydantic_ai import Agent

agent = Agent('anthropic:claude-3-5-sonnet-latest')

...

直接使用模型名称初始化模型:

anthropic_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.anthropic import AnthropicModel

model = AnthropicModel('claude-3-5-sonnet-latest')

agent = Agent(model)

...

provider 参数

您可以通过 provider 参数提供一个自定义的 Provider ,这样可以使描述更加清晰。

anthropic 模型提供者.py

from pydantic_ai import Agent

from pydantic_ai.models.anthropic import AnthropicModel

from pydantic_ai.providers.anthropic import AnthropicProvider

model = AnthropicModel(

'claude-3-5-sonnet-latest', provider=AnthropicProvider(api_key='your-api-key')

)

agent = Agent(model)

...

自定义 HTTP 客户端

您可以用自定义的 AnthropicProvider 替换 httpx.AsyncClient :

anthropic_model_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.anthropic import AnthropicModel

from pydantic_ai.providers.anthropic import AnthropicProvider

custom_http_client = AsyncClient(timeout=30)

model = AnthropicModel(

'claude-3-5-sonnet-latest',

provider=AnthropicProvider(api_key='your-api-key', http_client=custom_http_client),

)

agent = Agent(model)

...

Gemini

安装

使用 GeminiModel 模型,只需安装 pydantic-ai 或 pydantic-ai-slim ,无需额外依赖。这样的表述更加自然易懂。

配置

通过 Google 的 Generative Language API,您可以使用 Google 的 Gemini 模型, generativelanguage.googleapis.com 。(经过润色,使翻译更加自然易懂)

包含通过此接口可用的所有 Gemini 模型,可供选择使用。

要使用 GeminiModel ,请访问 aistudio.google.com,并选择“创建 API 密钥”。这样的表述更加自然,易于理解。

环境变量

一旦您获取了 API 密钥,您就可以将其设置为环境变量:

export GEMINI_API_KEY=your-api-key

您可以通过名称直接使用 GeminiModel :

gemini_model_by_name.py

from pydantic_ai import Agent

agent = Agent('google-gla:gemini-2.0-flash')

...

备注

“ google-gla 提供者前缀表示 Google 生成式语言 API,用于 GeminiModel 。 google-vertex 与 Vertex AI 配合使用。”

直接使用模型名称和提供者来初始化模型:

gemini 模型初始化.py

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

model = GeminiModel('gemini-2.0-flash', provider='google-gla')

agent = Agent(model)

...

provider 参数

您可以通过 provider 参数提供一个自定义的 Provider ,这样可以使描述更加清晰。

gemini 模型提供者.py

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_gla import GoogleGLAProvider

model = GeminiModel(

'gemini-2.0-flash', provider=GoogleGLAProvider(api_key='your-api-key')

)

agent = Agent(model)

...

您还可以通过自定义 http_client 来对 GoogleGLAProvider 进行个性化设置:

gemini_model_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_gla import GoogleGLAProvider

custom_http_client = AsyncClient(timeout=30)

model = GeminiModel(

'gemini-2.0-flash',

provider=GoogleGLAProvider(api_key='your-api-key', http_client=custom_http_client),

)

agent = Agent(model)

...

Gemini via VertexAI

如果您是企业用户,建议您使用 google-vertex 提供程序,该程序通过 GeminiModel 实现,并使用 *-aiplatform.googleapis.com API。

包含通过此接口可用的所有 Gemini 模型,可供选择使用。

安装

使用 google-vertex 提供程序配合 GeminiModel 时,您需要安装 pydantic-ai ,或者安装包含 vertexai 可选组的 pydantic-ai-slim :

pip install "pydantic-ai-slim[vertexai]"

uv add "pydantic-ai-slim[vertexai]"

配置

与上文所述相比,该接口拥有众多优势:

- VertexAI API 提供了更多针对企业级应用准备保障的功能,表述更为自然。

- 您可以通过 VertexAI 购买预留的吞吐量,从而保证容量。

- 如果你在 GCP 环境中使用 PydanticAI,无需进行认证设置,它应该能够“直接运行”。

- 您可以选择使用哪个区域,这从监管角度考虑可能很重要,也可能有助于降低延迟。

本地开发时,你可能需要创建和配置一个“服务帐户”,这在我过去一直觉得非常痛苦,难以正确设置。以下是一个更自然、更易理解的版本:在进行本地开发时,您可能需要创建并配置一个“服务帐户”,我之前发现这非常棘手,很难做到位。

无论您选择哪种认证方式,您都需要确保您的 GCP 账户中已启用 VertexAI 功能。这样表述更为自然和易于理解。

应用程序默认凭证

如果您在 GCP 环境中运行 PydanticAI,或者已经安装并配置了 gcloud CLI 工具,那么您无需进行任何额外设置即可使用 VertexAIModel 。

使用 VertexAIModel ,若已配置应用程序默认凭证(例如,使用 gcloud ),则可直接操作:

vertexai 应用默认凭证.py

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

model = GeminiModel('gemini-2.0-flash', provider='google-vertex')

agent = Agent(model)

...

内部使用 google.auth.default() 从 google-auth 包中获取凭证。(经过优化,使翻译更加自然易懂)

不会失败,直到 agent.run()

因为 google.auth.default() 需要发起网络请求,可能会比较耗时,因此只有在调用 agent.run() 之后才会执行。

如果应用程序默认凭据未设置项目,您可能需要传递 project_id 参数给 GoogleVertexProvider 。如果传递 project_id 且与默认凭据设置的项目冲突,将会报错。

服务账号

If instead of application default credentials, you want to authenticate with a service account, you'll need to create a service account, add it to your GCP project (note: AFAIK this step is necessary even if you created the service account within the project), give that service account the "Vertex AI Service Agent" role, and download the service account JSON file.

一旦您拥有 JSON 文件,您就可以这样使用它:

vertexai_service_account.py

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_vertex import GoogleVertexProvider

model = GeminiModel(

'gemini-2.0-flash',

provider=GoogleVertexProvider(service_account_file='path/to/service-account.json'),

)

agent = Agent(model)

...

如果您已经将服务账户信息保存在内存中,可以直接将其作为字典传入:

vertexai_service_account.py

import json

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_vertex import GoogleVertexProvider

service_account_info = json.loads(

'{"type": "service_account", "project_id": "my-project-id"}'

)

model = GeminiModel(

'gemini-2.0-flash',

provider=GoogleVertexProvider(service_account_info=service_account_info),

)

agent = Agent(model)

...

自定义区域

无论您选择哪种认证方式,您都可以通过 region 参数指定请求发送到的具体区域,使表述更加自然易懂。

使用靠近您应用程序的服务区域可以降低延迟,这在遵守监管规定方面可能具有重要意义。

vertexai_region.py

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_vertex import GoogleVertexProvider

model = GeminiModel(

'gemini-2.0-flash', provider=GoogleVertexProvider(region='asia-east1')

)

agent = Agent(model)

...

您还可以通过自定义 http_client 来对 GoogleVertexProvider 进行个性化设置:

vertexai_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.gemini import GeminiModel

from pydantic_ai.providers.google_vertex import GoogleVertexProvider

custom_http_client = AsyncClient(timeout=30)

model = GeminiModel(

'gemini-2.0-flash',

provider=GoogleVertexProvider(region='asia-east1', http_client=custom_http_client),

)

agent = Agent(model)

...

Groq

安装

使用 GroqModel 功能,您需要安装 pydantic-ai ,或者安装 pydantic-ai-slim 并选择包含 groq 的可选组件:

pip install "pydantic-ai-slim[groq]"

uv add "pydantic-ai-slim[groq]"

配置

要使用 Groq 的 API,请访问 console.groq.com/keys,按照页面指示操作,直到找到生成 API 密钥的地方。

包含可用的 Groq 模型清单。

环境变量

一旦您获取了 API 密钥,您就可以将其设置为环境变量:

export GROQ_API_KEY='your-api-key'

您可以通过名称直接使用 GroqModel :

groq_model_by_name.py

from pydantic_ai import Agent

agent = Agent('groq:llama-3.3-70b-versatile')

...

直接使用模型名称初始化模型:

groq 模型初始化.py

from pydantic_ai import Agent

from pydantic_ai.models.groq import GroqModel

model = GroqModel('llama-3.3-70b-versatile')

agent = Agent(model)

...

provider 参数

您可以通过 provider 参数提供一个自定义的 Provider ,这样可以使描述更加清晰。

groq 模型提供者.py

from pydantic_ai import Agent

from pydantic_ai.models.groq import GroqModel

from pydantic_ai.providers.groq import GroqProvider

model = GroqModel(

'llama-3.3-70b-versatile', provider=GroqProvider(api_key='your-api-key')

)

agent = Agent(model)

...

您还可以通过自定义 httpx.AsyncHTTPClient 来对 GroqProvider 进行个性化设置:

groq_model_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.groq import GroqModel

from pydantic_ai.providers.groq import GroqProvider

custom_http_client = AsyncClient(timeout=30)

model = GroqModel(

'llama-3.3-70b-versatile',

provider=GroqProvider(api_key='your-api-key', http_client=custom_http_client),

)

agent = Agent(model)

...

Mistral

安装

使用 MistralModel 功能,您需要安装 pydantic-ai ,或者安装 pydantic-ai-slim 并选择包含 mistral 的可选组件:

pip install "pydantic-ai-slim[mistral]"

uv add "pydantic-ai-slim[mistral]"

配置

要使用 Mistral 的 API,请访问 console.mistral.ai/api-keys/页面,按照提示操作,直到找到生成 API 密钥的位置。

包含最受欢迎的 Mistral 模型清单。

环境变量

一旦您获取了 API 密钥,您就可以将其设置为环境变量:

export MISTRAL_API_KEY='your-api-key'

您可以通过名称直接使用 MistralModel :

mistral_model_by_name.py

from pydantic_ai import Agent

agent = Agent('mistral:mistral-large-latest')

...

直接使用模型名称初始化模型:

mistral 模型初始化.py

from pydantic_ai import Agent

from pydantic_ai.models.mistral import MistralModel

model = MistralModel('mistral-small-latest')

agent = Agent(model)

...

provider

您可以通过 provider 参数提供一个自定义的 Provider ,这样可以使描述更加清晰。

groq_model_provider.py

from pydantic_ai import Agent

from pydantic_ai.models.mistral import MistralModel

from pydantic_ai.providers.mistral import MistralProvider

model = MistralModel(

'mistral-large-latest', provider=MistralProvider(api_key='your-api-key')

)

agent = Agent(model)

...

You can also customize the provider with a custom httpx.AsyncHTTPClient:

groq_model_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.mistral import MistralModel

from pydantic_ai.providers.mistral import MistralProvider

custom_http_client = AsyncClient(timeout=30)

model = MistralModel(

'mistral-large-latest',

provider=MistralProvider(api_key='your-api-key', http_client=custom_http_client),

)

agent = Agent(model)

...

Cohere

安装

使用 CohereModel,您需要安装 pydantic-ai,或者安装带有 cohere 可选组的 [pydantic-ai-slim](https://ai.pydantic.dev/install/#slim-install):

pip install "pydantic-ai-slim[cohere]"

uv add "pydantic-ai-slim[cohere]"

配置

要通过 API 使用 Cohere,请访问 dashboard.cohere.com/api-keys 并按照指示操作,直到找到生成 API 密钥的地方。

CohereModelName 包含最受欢迎的 Cohere 模型列表。

环境变量

一旦您有了 API 密钥,您可以将它设置为环境变量:

export CO_API_KEY='your-api-key'

然后,您可以通过名称使用 CohereModel:

cohere_model_by_name.py

from pydantic_ai import Agent

agent = Agent('cohere:command')

...

或直接使用模型名称初始化模型:

cohere_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.cohere import CohereModel

model = CohereModel('command')

agent = Agent(model)

...

provider 参数

您可以通过 Provider 参数提供自定义的 provider 参数 :

cohere_model_provider.py

from pydantic_ai import Agent

from pydantic_ai.models.cohere import CohereModel

from pydantic_ai.providers.cohere import CohereProvider

model = CohereModel('command', provider=CohereProvider(api_key='your-api-key'))

agent = Agent(model)

...

您也可以使用自定义的 CohereProvider,并指定一个自定义的 http_client:

cohere_model_custom_provider.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.cohere import CohereModel

from pydantic_ai.providers.cohere import CohereProvider

custom_http_client = AsyncClient(timeout=30)

model = CohereModel(

'command',

provider=CohereProvider(api_key='your-api-key', http_client=custom_http_client),

)

agent = Agent(model)

...

Bedrock

安装

要使用 BedrockConverseModel,您需要安装 pydantic-ai,或者安装带有 bedrock 可选组的 pydantic-ai-slim:

pip install "pydantic-ai-slim[bedrock]"

uv add "pydantic-ai-slim[bedrock]"

配置

使用 AWS Bedrock,您需要一个已启用 Bedrock 且具有相应凭证的 AWS 账户。您可以直接使用 AWS 凭证或预配置的 boto3 客户端。

BedrockModelName 包含可用的 Bedrock 模型列表,包括来自 Anthropic、Amazon、Cohere、Meta 和 Mistral 的模型。

环境变量

您可以将您的 AWS 凭证设置为环境变量( 以及其他选项 :

export AWS_ACCESS_KEY_ID='your-access-key'

export AWS_SECRET_ACCESS_KEY='your-secret-key'

export AWS_DEFAULT_REGION='us-east-1' # or your preferred region

然后您可以通过名称使用 BedrockConverseModel:

bedrock_model_by_name.py

from pydantic_ai import Agent

agent = Agent('bedrock:anthropic.claude-3-sonnet-20240229-v1:0')

...

或者直接使用模型名称初始化模型:

bedrock_model_by_name.py

from pydantic_ai import Agent

from pydantic_ai.models.bedrock import BedrockConverseModel

model = BedrockConverseModel('anthropic.claude-3-sonnet-20240229-v1:0')

agent = Agent(model)

...

provider 参数

您可以通过“provider”参数提供一个自定义的 BedrockProvider。这在您想直接指定凭据或使用自定义 boto3 客户端时很有用。

bedrock_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.bedrock import BedrockConverseModel

from pydantic_ai.providers.bedrock import BedrockProvider

# Using AWS credentials directly

model = BedrockConverseModel(

'anthropic.claude-3-sonnet-20240229-v1:0',

provider=BedrockProvider(

region_name='us-east-1',

aws_access_key_id='your-access-key',

aws_secret_access_key='your-secret-key',

),

)

agent = Agent(model)

...

您也可以传递一个预配置的 boto3 客户端:

bedrock_model_boto3.py

import boto3

from pydantic_ai import Agent

from pydantic_ai.models.bedrock import BedrockConverseModel

from pydantic_ai.providers.bedrock import BedrockProvider

# Using a pre-configured boto3 client

bedrock_client = boto3.client('bedrock-runtime', region_name='us-east-1')

model = BedrockConverseModel(

'anthropic.claude-3-sonnet-20240229-v1:0',

provider=BedrockProvider(bedrock_client=bedrock_client),

)

agent = Agent(model)

...

兼容 OpenAI 的模型

许多模型与 OpenAI API 兼容,因此可以使用 PydanticAI 中的 OpenAIModel。在开始之前,请查看 OpenAI 部分以获取安装和配置说明。

要使用另一个与 OpenAI 兼容的 API,您可以使用base_url以及来自 OpenAIProvider 的 api_key参数:

deepseek_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'model_name',

provider=OpenAIProvider(

base_url='https://<openai-compatible-api-endpoint>.com', api_key='your-api-key'

),

)

agent = Agent(model)

...

您还可以使用带有自定义提供器类的 provider 参数,例如 DeepSeekProvider

deepseek_model_init_provider_class.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.deepseek import DeepSeekProvider

model = OpenAIModel(

'deepseek-chat',

provider=DeepSeekProvider(api_key='your-deepseek-api-key'),

)

agent = Agent(model)

...

您也可以使用自定义的 http_client 来定制任何提供者:

deepseek_model_init_provider_custom.py

from httpx import AsyncClient

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.deepseek import DeepSeekProvider

custom_http_client = AsyncClient(timeout=30)

model = OpenAIModel(

'deepseek-chat',

provider=DeepSeekProvider(

api_key='your-deepseek-api-key', http_client=custom_http_client

),

)

agent = Agent(model)

...

Ollama

使用 Ollama,您必须首先下载 Ollama 客户端,然后使用 Ollama 模型库下载一个模型。

在尝试向其发送请求时,您还必须确保 Ollama 服务器正在运行。有关更多信息,请参阅 Ollama 文档 。

示例本地使用

安装 ollama 后,您可以使用您想要的模型运行服务器:

终端运行 ollama

ollama run llama3.2

(如果您尚未下载,此命令将拉取 llama3.2 模型)

然后运行你的代码,这里有一个最小示例:

ollama_example.py

from pydantic import BaseModel

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

class CityLocation(BaseModel):

city: str

country: str

ollama_model = OpenAIModel(

model_name='llama3.2', provider=OpenAIProvider(base_url='http://localhost:11434/v1')

)

agent = Agent(ollama_model, result_type=CityLocation)

result = agent.run_sync('Where were the olympics held in 2012?')

print(result.data)

#> city='London' country='United Kingdom'

print(result.usage())

"""

Usage(requests=1, request_tokens=57, response_tokens=8, total_tokens=65, details=None)

"""

使用远程服务器示例

ollama_example_with_remote_server.py

from pydantic import BaseModel

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

ollama_model = OpenAIModel(

model_name='qwen2.5-coder:7b',

provider=OpenAIProvider(base_url='http://192.168.1.74:11434/v1'),

)

class CityLocation(BaseModel):

city: str

country: str

agent = Agent(model=ollama_model, result_type=CityLocation)

result = agent.run_sync('Where were the olympics held in 2012?')

print(result.data)

#> city='London' country='United Kingdom'

print(result.usage())

"""

Usage(requests=1, request_tokens=57, response_tokens=8, total_tokens=65, details=None)

"""

Azure AI Foundry

如果您想使用 Azure AI Foundry 作为您的提供商,您可以通过使用 AzureProvider 类来实现。

azure_provider_example.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.azure import AzureProvider

model = OpenAIModel(

'gpt-4o',

provider=AzureProvider(

azure_endpoint='your-azure-endpoint',

api_version='your-api-version',

api_key='your-api-key',

),

)

agent = Agent(model)

...

OpenRouter

要使用 OpenRouter,首先在 openrouter.ai/keys 创建一个 API 密钥。

获取 API 密钥后,您可以使用它与 OpenAIProvider:

openrouter_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'anthropic/claude-3.5-sonnet',

provider=OpenAIProvider(

base_url='https://openrouter.ai/api/v1',

api_key='your-openrouter-api-key',

),

)

agent = Agent(model)

...

Grok(xAI)

前往 xAI API 控制台 创建 API 密钥。一旦您有了 API 密钥,就可以使用它与 OpenAIProvider:

grok_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'grok-2-1212',

provider=OpenAIProvider(base_url='https://api.x.ai/v1', api_key='your-xai-api-key'),

)

agent = Agent(model)

...

Perplexity

遵循Perplexity入门指南 指南创建 API 密钥。然后,您可以使用以下方式查询困惑度 API:

perplexity_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'sonar-pro',

provider=OpenAIProvider(

base_url='https://api.perplexity.ai',

api_key='your-perplexity-api-key',

),

)

agent = Agent(model)

...

Fireworks AI

前往 Fireworks.AI 并在您的账户设置中创建一个 API 密钥。一旦您有了 API 密钥,您就可以使用它与 OpenAIProvider:

fireworks_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'accounts/fireworks/models/qwq-32b', # model library available at https://fireworks.ai/models

provider=OpenAIProvider(

base_url='https://api.fireworks.ai/inference/v1',

api_key='your-fireworks-api-key',

),

)

agent = Agent(model)

...

Together AI

前往 Together.ai 并在你的账户设置中创建一个 API 密钥。一旦你有了 API 密钥,你就可以使用它与 OpenAIProvider:

together_model_init.py

from pydantic_ai import Agent

from pydantic_ai.models.openai import OpenAIModel

from pydantic_ai.providers.openai import OpenAIProvider

model = OpenAIModel(

'meta-llama/Llama-3.3-70B-Instruct-Turbo-Free', # model library available at https://www.together.ai/models

provider=OpenAIProvider(

base_url='https://api.together.xyz/v1',

api_key='your-together-api-key',

),

)

agent = Agent(model)

...

实现自定义模型

要实现支持尚未支持的模式,您需要继承模型抽象基类。

对于流式传输,您还需要实现以下抽象基类:

最佳的起点是审查现有实现的源代码,例如 OpenAIModel。

关于何时接受向 PydanticAI 添加新模型的贡献,请参阅贡献指南的详细信息。

回退

您可以使用 FallbackModel 尝试多个模型,直到其中一个返回成功的结果。在底层,如果当前模型返回 4xx 或 5xx 状态码,PydanticAI 会自动切换到下一个模型。

在以下示例中,代理首先向 OpenAI 模型发出请求(由于 API 密钥无效而失败),然后回退到 Anthropic 模型。

fallback_model.py

from pydantic_ai import Agent

from pydantic_ai.models.anthropic import AnthropicModel

from pydantic_ai.models.fallback import FallbackModel

from pydantic_ai.models.openai import OpenAIModel

openai_model = OpenAIModel('gpt-4o')

anthropic_model = AnthropicModel('claude-3-5-sonnet-latest')

fallback_model = FallbackModel(openai_model, anthropic_model)

agent = Agent(fallback_model)

response = agent.run_sync('What is the capital of France?')

print(response.data)

#> Paris

print(response.all_messages())

"""

[

ModelRequest(

parts=[

UserPromptPart(

content='What is the capital of France?',

timestamp=datetime.datetime(...),

part_kind='user-prompt',

)

],

kind='request',

),

ModelResponse(

parts=[TextPart(content='Paris', part_kind='text')],

model_name='claude-3-5-sonnet-latest',

timestamp=datetime.datetime(...),

kind='response',

),

]

"""

上述《ModelResponse》消息在《model_name》字段中指示结果是由 Anthropic 模型返回的,该模型是《FallbackModel》中指定的第二个模型。

注意

每个模型的选项应单独配置。例如,base_url、api_key 以及自定义客户端应在每个模型本身上设置,而不是在 FallbackModel 上设置。

在下一个示例中,我们展示了 FallbackModel 的异常处理能力。如果所有模型都失败,则会引发一个包含在 FallbackExceptionGroup 中的异常,该异常包含在 run 执行过程中遇到的全部异常。

fallback_model_failure.py

from pydantic_ai import Agent

from pydantic_ai.exceptions import ModelHTTPError

from pydantic_ai.models.anthropic import AnthropicModel

from pydantic_ai.models.fallback import FallbackModel

from pydantic_ai.models.openai import OpenAIModel

openai_model = OpenAIModel('gpt-4o')

anthropic_model = AnthropicModel('claude-3-5-sonnet-latest')

fallback_model = FallbackModel(openai_model, anthropic_model)

agent = Agent(fallback_model)

try:

response = agent.run_sync('What is the capital of France?')

except* ModelHTTPError as exc_group:

for exc in exc_group.exceptions:

print(exc)

Since except* is only supported in Python 3.11+, we use the exceptiongroup backport package for earlier Python versions:

fallback_model_failure.py

from exceptiongroup import catch

from pydantic_ai import Agent

from pydantic_ai.exceptions import ModelHTTPError

from pydantic_ai.models.anthropic import AnthropicModel

from pydantic_ai.models.fallback import FallbackModel

from pydantic_ai.models.openai import OpenAIModel

def model_status_error_handler(exc_group: BaseExceptionGroup) -> None:

for exc in exc_group.exceptions:

print(exc)

openai_model = OpenAIModel('gpt-4o')

anthropic_model = AnthropicModel('claude-3-5-sonnet-latest')

fallback_model = FallbackModel(openai_model, anthropic_model)

agent = Agent(fallback_model)

with catch({ModelHTTPError: model_status_error_handler}):

response = agent.run_sync('What is the capital of France?')

默认情况下,FallbackModel 只有在当前模型抛出异常时才会切换到下一个模型。 ModelHTTPError。您可以通过向 FallbackModel 构造函数传递自定义的 fallback_on 参数来自定义此行为。

依赖

PydanticAI 通过依赖注入系统为您的智能体系统中的提示、工具和结果验证器提供数据和服务,使系统更加灵活和高效。

与 PydanticAI 的设计理念相契合,我们的依赖管理系统致力于采用 Python 开发领域的现有最佳实践,而非创造晦涩的“魔法”。这样做可以使依赖项更加类型安全、易于理解、便于测试,并最终简化生产部署过程。

定义依赖关系

依赖可以是任何 Python 类型。在简单场景下,你可能只需传递单个对象作为依赖(例如,一个 HTTP 连接),但如果依赖项包含多个对象,数据类通常是一个更方便的容器。

这里是一个关于如何定义需要依赖项的代理的示例。

(注意:本示例中并未实际使用依赖项,详情请参考“访问依赖项”部分)

unused_dependencies.py

from dataclasses import dataclass

import httpx

from pydantic_ai import Agent

@dataclass

class MyDeps:

api_key: str

http_client: httpx.AsyncClient

agent = Agent(

'openai:gpt-4o',

deps_type=MyDeps,

)

async def main():

async with httpx.AsyncClient() as client:

deps = MyDeps('foobar', client)

result = await agent.run(

'Tell me a joke.',

deps=deps,

)

print(result.data)

#> Did you hear about the toothpaste scandal? They called it Colgate.

(本示例完整,可直接运行——运行时需添加 asyncio.run(main()) 才能执行 main )

访问依赖项

依赖项通过 RunContext 类型进行访问,这应该是系统提示函数等中的第一个参数。

system_prompt_dependencies.py

from dataclasses import dataclass

import httpx

from pydantic_ai import Agent, RunContext

@dataclass

class MyDeps:

api_key: str

http_client: httpx.AsyncClient

agent = Agent(

'openai:gpt-4o',

deps_type=MyDeps,

)

@agent.system_prompt

async def get_system_prompt(ctx: RunContext[MyDeps]) -> str:

response = await ctx.deps.http_client.get(

'https://example.com',

headers={'Authorization': f'Bearer {ctx.deps.api_key}'},

)

response.raise_for_status()

return f'Prompt: {response.text}'

async def main():

async with httpx.AsyncClient() as client:

deps = MyDeps('foobar', client)

result = await agent.run('Tell me a joke.', deps=deps)

print(result.data)

#> Did you hear about the toothpaste scandal? They called it Colgate.

(本示例完整,可直接运行——运行时需添加 asyncio.run(main()) 才能执行 main )

异步与同步依赖

系统提示函数、功能工具以及结果验证器均运行于代理执行的异步环境中。

如果这些函数不是协程(例如 async def ),它们会在线程池中使用 run_in_executor 进行调用,因此在使用执行 IO 操作的 async 方法时,稍微更可取一些。不过,同步依赖项也能正常工作。

run 与 run_sync 的对比以及异步依赖和同步依赖的区别

无论您使用同步依赖还是异步依赖,这都与您是否使用 run 或 run_sync 无关—— run_sync 只是对 run 的封装,代理总是以异步方式运行。

以下是与上面相同的示例,但使用了同步依赖:

同步依赖.py

from dataclasses import dataclass

import httpx

from pydantic_ai import Agent, RunContext

@dataclass

class MyDeps:

api_key: str

http_client: httpx.Client

agent = Agent(

'openai:gpt-4o',

deps_type=MyDeps,

)

@agent.system_prompt

def get_system_prompt(ctx: RunContext[MyDeps]) -> str:

response = ctx.deps.http_client.get(

'https://example.com', headers={'Authorization': f'Bearer {ctx.deps.api_key}'}

)

response.raise_for_status()

return f'Prompt: {response.text}'

async def main():

deps = MyDeps('foobar', httpx.Client())

result = await agent.run(

'Tell me a joke.',

deps=deps,

)

print(result.data)

#> Did you hear about the toothpaste scandal? They called it Colgate.

(本示例完整,可直接运行——运行时需添加 asyncio.run(main()) 才能执行 main )

完整示例

除了系统提示之外,依赖关系还可以应用于工具和结果验证器,表述更为自然流畅。

full_example.py

from dataclasses import dataclass

import httpx

from pydantic_ai import Agent, ModelRetry, RunContext

@dataclass

class MyDeps:

api_key: str

http_client: httpx.AsyncClient

agent = Agent(

'openai:gpt-4o',

deps_type=MyDeps,

)

@agent.system_prompt

async def get_system_prompt(ctx: RunContext[MyDeps]) -> str:

response = await ctx.deps.http_client.get('https://example.com')

response.raise_for_status()

return f'Prompt: {response.text}'

@agent.tool

async def get_joke_material(ctx: RunContext[MyDeps], subject: str) -> str:

response = await ctx.deps.http_client.get(

'https://example.com#jokes',

params={'subject': subject},

headers={'Authorization': f'Bearer {ctx.deps.api_key}'},

)

response.raise_for_status()

return response.text

@agent.result_validator

async def validate_result(ctx: RunContext[MyDeps], final_response: str) -> str:

response = await ctx.deps.http_client.post(

'https://example.com#validate',

headers={'Authorization': f'Bearer {ctx.deps.api_key}'},

params={'query': final_response},

)

if response.status_code == 400:

raise ModelRetry(f'invalid response: {response.text}')

response.raise_for_status()

return final_response

async def main():

async with httpx.AsyncClient() as client:

deps = MyDeps('foobar', client)

result = await agent.run('Tell me a joke.', deps=deps)

print(result.data)

#> Did you hear about the toothpaste scandal? They called it Colgate.

(本示例完整,可直接运行——运行时需添加 asyncio.run(main()) 才能执行 main )

覆盖依赖

测试智能体时,能够根据需要自定义依赖项,这一点非常实用。

虽然有时可以在单元测试中直接调用代理,但也可以在调用应用程序代码时覆盖依赖,进而调用代理。这样可以使代码更加灵活,更易于测试。

这是通过代理上的 override 方法完成的,表述更为自然易懂。

joke_app.py

from dataclasses import dataclass

import httpx

from pydantic_ai import Agent, RunContext

@dataclass

class MyDeps:

api_key: str

http_client: httpx.AsyncClient

async def system_prompt_factory(self) -> str:

response = await self.http_client.get('https://example.com')

response.raise_for_status()

return f'Prompt: {response.text}'

joke_agent = Agent('openai:gpt-4o', deps_type=MyDeps)

@joke_agent.system_prompt

async def get_system_prompt(ctx: RunContext[MyDeps]) -> str:

return await ctx.deps.system_prompt_factory()

async def application_code(prompt: str) -> str:

...

...

# now deep within application code we call our agent

async with httpx.AsyncClient() as client:

app_deps = MyDeps('foobar', client)

result = await joke_agent.run(prompt, deps=app_deps)

return result.data

(本示例完整,可以直接运行)

test_joke_app.py

from joke_app import MyDeps, application_code, joke_agent

class TestMyDeps(MyDeps):

async def system_prompt_factory(self) -> str:

return 'test prompt'

async def test_application_code():

test_deps = TestMyDeps('test_key', None)

with joke_agent.override(deps=test_deps):

joke = await application_code('Tell me a joke.')

assert joke.startswith('Did you hear about the toothpaste scandal?')

示例

以下示例展示了如何在 PydanticAI 中使用依赖项:

功能工具

函数工具提供了一种机制,让模型能够获取额外信息,从而辅助生成响应。这样的翻译更加自然、易懂。

当无法将所有必要的上下文信息输入到系统提示中,或者希望通过将生成响应的部分逻辑委托给其他(不一定是 AI)工具来提高代理行为的确定性和可靠性时,这些工具非常有用。

函数工具与 RAG 的对比分析

函数工具是 RAG(检索增强生成)的核心,它们通过让模型请求额外信息来增强模型的能力,使翻译更加自然易懂。

PydanticAI 工具与 RAG 的主要区别在于,RAG 通常指的是向量搜索,而 PydanticAI 工具则具有更广泛的通用性。(请注意,我们未来可能会加入对向量搜索的支持,特别是生成嵌入的 API。详见 #58)

将工具注册到代理中,有多种途径可供选择:

- 通过

@agent.tool装饰器 —— 适用于需要访问代理上下文的工具 - 通过

@agent.tool_plain装饰器 —— 适用于无需访问代理上下文的工具 - 通过

Agent的tools关键字参数,可以传入普通函数或Tool的实例

@agent.tool 被视为默认装饰器,因为在大多数情况下,工具都需要访问代理的上下文信息。

这里是一个同时使用两个示例的例子:

dice_game.py

import random

from pydantic_ai import Agent, RunContext

agent = Agent(

'google-gla:gemini-1.5-flash',

deps_type=str,

system_prompt=(

"You're a dice game, you should roll the die and see if the number "

"you get back matches the user's guess. If so, tell them they're a winner. "

"Use the player's name in the response."

),

)

@agent.tool_plain

def roll_die() -> str:

"""Roll a six-sided die and return the result."""

return str(random.randint(1, 6))

@agent.tool

def get_player_name(ctx: RunContext[str]) -> str:

"""Get the player's name."""

return ctx.deps

dice_result = agent.run_sync('My guess is 4', deps='Anne')

print(dice_result.data)

#> Congratulations Anne, you guessed correctly! You're a winner!

(本示例完整,可以直接运行)

让我们从那款游戏的消息中打印出来,看看具体发生了什么情况:

dice_game_messages.py

from dice_game import dice_result

print(dice_result.all_messages())

"""

[

ModelRequest(

parts=[

SystemPromptPart(

content="You're a dice game, you should roll the die and see if the number you get back matches the user's guess. If so, tell them they're a winner. Use the player's name in the response.",

timestamp=datetime.datetime(...),

dynamic_ref=None,

part_kind='system-prompt',

),

UserPromptPart(

content='My guess is 4',

timestamp=datetime.datetime(...),

part_kind='user-prompt',

),

],

kind='request',

),

ModelResponse(

parts=[

ToolCallPart(

tool_name='roll_die',

args={},

tool_call_id='pyd_ai_tool_call_id',

part_kind='tool-call',

)

],

model_name='gemini-1.5-flash',

timestamp=datetime.datetime(...),

kind='response',

),

ModelRequest(

parts=[

ToolReturnPart(

tool_name='roll_die',

content='4',

tool_call_id='pyd_ai_tool_call_id',

timestamp=datetime.datetime(...),

part_kind='tool-return',

)

],

kind='request',

),

ModelResponse(

parts=[

ToolCallPart(

tool_name='get_player_name',

args={},

tool_call_id='pyd_ai_tool_call_id',

part_kind='tool-call',

)

],

model_name='gemini-1.5-flash',

timestamp=datetime.datetime(...),

kind='response',

),

ModelRequest(

parts=[

ToolReturnPart(

tool_name='get_player_name',

content='Anne',

tool_call_id='pyd_ai_tool_call_id',

timestamp=datetime.datetime(...),

part_kind='tool-return',

)

],

kind='request',

),

ModelResponse(

parts=[

TextPart(

content="Congratulations Anne, you guessed correctly! You're a winner!",

part_kind='text',

)

],

model_name='gemini-1.5-flash',

timestamp=datetime.datetime(...),

kind='response',

),

]

"""

我们可以用图表来展示这一点,更直观易懂。

通过关键字参数注册函数工具

除了使用装饰器外,我们还可以通过构造函数的 tools 参数来注册工具。这样做可以方便地重用工具,并且能够对工具进行更精细的控制。

dice_game_tool_kwarg.py

import random

from pydantic_ai import Agent, RunContext, Tool

system_prompt = """\

You're a dice game, you should roll the die and see if the number

you get back matches the user's guess. If so, tell them they're a winner.

Use the player's name in the response.

"""

def roll_die() -> str:

"""Roll a six-sided die and return the result."""

return str(random.randint(1, 6))

def get_player_name(ctx: RunContext[str]) -> str:

"""Get the player's name."""

return ctx.deps

agent_a = Agent(

'google-gla:gemini-1.5-flash',

deps_type=str,

tools=[roll_die, get_player_name],

system_prompt=system_prompt,

)

agent_b = Agent(

'google-gla:gemini-1.5-flash',

deps_type=str,

tools=[

Tool(roll_die, takes_ctx=False),

Tool(get_player_name, takes_ctx=True),

],

system_prompt=system_prompt,

)

dice_result = {}

dice_result['a'] = agent_a.run_sync('My guess is 6', deps='Yashar')

dice_result['b'] = agent_b.run_sync('My guess is 4', deps='Anne')

print(dice_result['a'].data)

#> Tough luck, Yashar, you rolled a 4. Better luck next time.

print(dice_result['b'].data)

#> Congratulations Anne, you guessed correctly! You're a winner!

(本示例完整,可以直接运行)

函数工具与结构化结果对比分析

正如其名称所暗示的,功能工具通过使用模型的“工具”或“函数”API 来告知模型可以调用哪些功能。工具或函数还用于定义结构化响应的格式,因此一个模型可能能够访问多个工具,其中一些工具会调用功能工具,而另一些则会在执行完毕后返回结果。

函数工具与模式

函数参数是从函数签名中提取的,除了 RunContext 之外的所有参数都用于构建该工具调用的模式。这样的描述更加自然,易于理解。

更好的是,PydanticAI 不仅从函数中提取文档字符串,还利用 griffe 从文档字符串中提取参数描述,并将其加入到模式中,使功能更加强大。

Griffe 支持从 google 、 numpy 和 sphinx 风格的文档字符串中提取参数描述。PydanticAI 会根据文档字符串自动推断使用格式,但您也可以通过 docstring_format 来明确指定。同时,您还可以通过设置 require_parameter_descriptions=True 来强制参数要求。如果缺少参数描述,将会抛出 UserError 异常。

为了展示工具的架构,这里我们使用 FunctionModel 来打印模型接收到的架构信息:

tool_schema.py

from pydantic_ai import Agent

from pydantic_ai.messages import ModelMessage, ModelResponse, TextPart

from pydantic_ai.models.function import AgentInfo, FunctionModel

agent = Agent()

@agent.tool_plain(docstring_format='google', require_parameter_descriptions=True)

def foobar(a: int, b: str, c: dict[str, list[float]]) -> str:

"""Get me foobar.

Args:

a: apple pie

b: banana cake

c: carrot smoothie

"""

return f'{a} {b} {c}'

def print_schema(messages: list[ModelMessage], info: AgentInfo) -> ModelResponse:

tool = info.function_tools[0]

print(tool.description)

#> Get me foobar.

print(tool.parameters_json_schema)

"""

{

'additionalProperties': False,

'properties': {

'a': {'description': 'apple pie', 'type': 'integer'},

'b': {'description': 'banana cake', 'type': 'string'},

'c': {

'additionalProperties': {'items': {'type': 'number'}, 'type': 'array'},

'description': 'carrot smoothie',

'type': 'object',

},

},

'required': ['a', 'b', 'c'],

'type': 'object',

}

"""

return ModelResponse(parts=[TextPart('foobar')])

agent.run_sync('hello', model=FunctionModel(print_schema))

(本示例完整,可以直接运行)

工具的返回类型可以是 Pydantic 能够序列化为 JSON 的任何类型。例如,一些模型(如 Gemini)支持半结构化返回值,而另一些则期望文本输入(如 OpenAI)。即使模型期望字符串,返回的 Python 对象也会被序列化为 JSON。这样的设计使得工具能够灵活地处理不同类型的返回值,同时确保数据的一致性和可序列化性。

如果一个工具的单个参数可以用 JSON 模式(例如 dataclass、TypedDict、pydantic 模型)表示,则该工具的模式将简化为仅包含该对象。

这里是一个示例,我们使用 TestModel.last_model_request_parameters 来检查将要传递给模型的工具模式,翻译更加自然和易于理解。

single_parameter_tool.py

from pydantic import BaseModel

from pydantic_ai import Agent

from pydantic_ai.models.test import TestModel

agent = Agent()

class Foobar(BaseModel):

"""This is a Foobar"""

x: int

y: str

z: float = 3.14

@agent.tool_plain

def foobar(f: Foobar) -> str:

return str(f)

test_model = TestModel()

result = agent.run_sync('hello', model=test_model)

print(result.data)

#> {"foobar":"x=0 y='a' z=3.14"}

print(test_model.last_model_request_parameters.function_tools)

"""

[

ToolDefinition(

name='foobar',

description='This is a Foobar',

parameters_json_schema={

'properties': {

'x': {'type': 'integer'},

'y': {'type': 'string'},

'z': {'default': 3.14, 'type': 'number'},

},

'required': ['x', 'y'],

'title': 'Foobar',

'type': 'object',

},

outer_typed_dict_key=None,

)

]

"""

(本示例完整,可以直接运行)

动态函数工具

工具可以可选地通过另一个函数定义: prepare ,在运行过程中的每个步骤调用,用于自定义传递给模型的工具定义,或者在该步骤中完全省略工具。这样的翻译更加自然,易于理解。

可以通过 prepare 参数将方法注册到任何工具注册机制中:

@agent.tool装饰器(更自然的翻译)@agent.tool_plain装饰器(更自然的翻译)Tool数据类

“ prepare 方法,应属于 ToolPrepareFunc 类型,一个接受 RunContext 和预构建的 ToolDefinition 的函数,应返回该 ToolDefinition 并可能修改它,或返回新的 ToolDefinition ,或者返回 None 以表示该工具不应为此步骤进行注册。”

这里介绍了一个简单的方法,只有当依赖项的值为 42 时,才会包含该工具。

与前一个示例相同,我们通过使用 TestModel 来展示功能,而不实际调用模型。

tool_only_if_42.py

from typing import Union

from pydantic_ai import Agent, RunContext

from pydantic_ai.tools import ToolDefinition

agent = Agent('test')

async def only_if_42(

ctx: RunContext[int], tool_def: ToolDefinition

) -> Union[ToolDefinition, None]:

if ctx.deps == 42:

return tool_def

@agent.tool(prepare=only_if_42)

def hitchhiker(ctx: RunContext[int], answer: str) -> str:

return f'{ctx.deps} {answer}'

result = agent.run_sync('testing...', deps=41)

print(result.data)

#> success (no tool calls)

result = agent.run_sync('testing...', deps=42)

print(result.data)

#> {"hitchhiker":"42 a"}

(本示例完整,可以直接运行)

这里有一个更复杂的例子,我们将根据 deps 的值来调整 name 参数的描述,使其更加自然易懂。

为了增加多样性,我们采用 Tool 数据类来创建这个工具,使翻译更加自然易懂。

customize_name.py